1. Overview

The BEAT platform can be used to explore and reproduce experiments in machine learning and pattern recognition. One of the major goals of the BEAT platform is the re-usability and reproducibility of research experiments. The platform uses several mechanisms to ensure that each experiment is reproducible and that experiments share parts with each other as much as possible. For example, in a simple experiment, the database, the algorithm, and the environment used to run the experiment can be shared with other experiments.

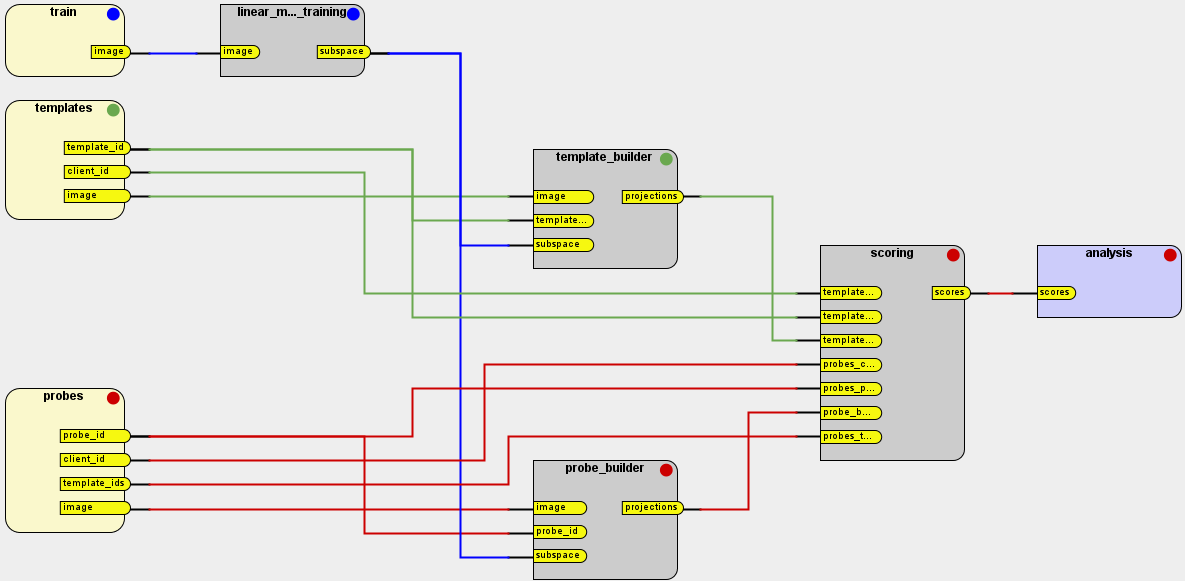

A fundamental part of the Experiments in the BEAT platform is a toolchain. You can see an example of a toolchain below:

Toolchains are sequences of blocks and links (like a block diagram) which represent the data flow of an experiment. Once you have defined the toolchain against which you’d like to run, the experiment can be further configured by assigning different datasets, algorithms and analyzers to the different toolchain blocks.
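As a rough illustration of the idea of blocks and links, a toolchain can be modelled as a small directed graph. This is only a sketch and not the platform's actual toolchain format: the `Toolchain` and `Connection` classes, the `analysis` block and all assigned resource names are assumptions made up for this example. Only the block names `train` and `linear_machine_training` come from this documentation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Connection:
    """A directed link carrying data from one block to another."""
    source: str
    target: str


@dataclass
class Toolchain:
    """A block diagram: named blocks plus the links between them."""
    blocks: list = field(default_factory=list)
    connections: list = field(default_factory=list)

    def downstream(self, block):
        """Return the blocks that directly receive data from `block`."""
        return [c.target for c in self.connections if c.source == block]


# "analysis" is a hypothetical block added only for illustration.
toolchain = Toolchain(
    blocks=["train", "linear_machine_training", "analysis"],
    connections=[
        Connection("train", "linear_machine_training"),
        Connection("linear_machine_training", "analysis"),
    ],
)

# Configuring an experiment: one dataset, algorithm or analyzer per block.
# Every resource name on the right-hand side is hypothetical.
assignment = {
    "train": "dataset: example_images",
    "linear_machine_training": "algorithm: example_trainer",
    "analysis": "analyzer: example_scores",
}

print(toolchain.downstream("train"))
```

Keeping the toolchain (the shape of the data flow) separate from the assignment (what runs in each block) mirrors the description above: the same toolchain can be re-used with different datasets, algorithms and analyzers.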

The data that circulates at each toolchain connection in a configured experiment is formally defined using Data Formats. The platform experiment configuration system will prevent you from using incompatible data formats between connecting blocks. For example, in the toolchain depicted above, if one decides to use a dataset on the block train (top-left of the diagram) that yields images, the subsequent block cannot contain an algorithm that can only treat audio signals. Once the dataset on the block train has been chosen, the platform will not offer you incompatible algorithms to be placed on the following regular block linear_machine_training - only compatible algorithms.
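The compatibility rule described above can be sketched as a simple filter over candidate algorithms. This is a minimal illustration under stated assumptions, not the platform's implementation: the `Algorithm` class, the format names (`image`, `audio`, `linear_machine`) and the algorithm names are hypothetical, and compatibility is assumed here to mean an exact match of format names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Algorithm:
    """A hypothetical algorithm with one input and one output data format."""
    name: str
    input_format: str
    output_format: str


def compatible_algorithms(upstream_format, candidates):
    """Keep only the algorithms that can consume the upstream data format.

    Assumption: two formats are compatible only if their names are equal.
    """
    return [a for a in candidates if a.input_format == upstream_format]


# Hypothetical candidates for the block "linear_machine_training".
candidates = [
    Algorithm("image_linear_trainer", "image", "linear_machine"),
    Algorithm("audio_linear_trainer", "audio", "linear_machine"),
]

# The dataset chosen for the block "train" is assumed to yield images.
train_output_format = "image"

offered = compatible_algorithms(train_output_format, candidates)
print([a.name for a in offered])  # prints ['image_linear_trainer']
```

Because the filter runs as soon as the upstream dataset is chosen, the audio-only algorithm never appears among the options for the next block.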

You can create your own experiments and develop your own algorithms or change existing ones. An experiment usually consists of three main parts: the toolchain, datasets, and algorithms.

Datasets are system resources provided by the BEAT platform instance you are using. Normal users cannot create or change the datasets available in the platform. Please refer to our Frequently Asked Questions for more information.

You can learn how to develop new algorithms or change existing ones by looking at our Algorithms section. Result analyzers are a special kind of algorithm used to generate the results of experiments.

You can check the results of an experiment once it finishes and share them with other people or Teams using our sharing interface. Moreover, you can request an attestation (refer to Attestations) of your experiment, which ensures that it is reproducible and that its details can no longer be changed. This is done by locking the resources that were used in your experiment.

Reports are like macro-Attestations. You can lock several experiments together and generate a selected list of tables and figures that you can re-use in your scientific publications. Reports can be used to gather the results of the different experiments of one particular publication on one page, so that you can share them with others through a single link.

Experiments and almost any other resource can be copied (or forked, in BEAT parlance) to ensure maximum re-usability and reproducibility. Users can fork different resources, change them, and use them in their own experiments. In the Search section you can learn how to search for the different resources available to you on the BEAT platform.

You can also use our search feature to create leaderboards. Leaderboards are stored searches of experiment results with certain criteria and filters. The experiment results are sorted by one of the available criteria of your choosing (e.g. a performance indicator that you consider valuable). Leaderboards are regularly updated by the platform machinery and, as soon as a leaderboard changes, you will be notified (consult our section on User Settings for information on how to enable or disable e-mail notifications). This helps you keep track of your favorite machine learning or pattern recognition tasks and datasets.
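The notion of a leaderboard as a stored search (a filter plus a sort criterion that is re-evaluated over time) can be sketched as follows. This is a toy illustration only: the result records, the `database` filter, the `accuracy` criterion and the `ranking` function are all hypothetical and do not reflect the platform's search interface.

```python
def ranking(results, database, criterion, higher_is_better=True):
    """Filter results by database, then sort them by the chosen criterion.

    Returns the experiment names in leaderboard order.
    """
    matching = [r for r in results if r["database"] == database]
    ordered = sorted(matching, key=lambda r: r[criterion], reverse=higher_is_better)
    return [r["experiment"] for r in ordered]


# Hypothetical experiment results.
results = [
    {"experiment": "exp_a", "database": "db_x", "accuracy": 0.91},
    {"experiment": "exp_b", "database": "db_x", "accuracy": 0.87},
    {"experiment": "exp_c", "database": "db_y", "accuracy": 0.99},
]

# First evaluation of the stored search.
before = ranking(results, "db_x", "accuracy")

# A new experiment finishes; the stored search is evaluated again.
results.append({"experiment": "exp_d", "database": "db_x", "accuracy": 0.95})
after = ranking(results, "db_x", "accuracy")

# A change in the ranking is what would trigger a notification.
changed = before != after
print(before, after, changed)
```

Here the ranking goes from `exp_a, exp_b` to `exp_d, exp_a, exp_b`, so the comparison reports a change. Results on `db_y` never enter the ranking because they do not match the stored filter.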