No artificial intelligence without full mastery of maths and statistics

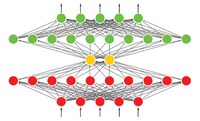

Nowadays, for better or worse, artificial intelligence is seen as a leading-edge technology, and terms like neural networks are often used as synonyms for state-of-the-art achievements. This trend is nothing new. Back in the 1980s, researchers in the then-new field of digital audio and imaging hoped to use specific neural network architectures, called autoencoders, to encode and transmit the relevant information conveyed by a signal. This seemed a promising way not only to transmit such information, but also to potentially improve the quality of the output (decoded) signal.

In 1988, Hervé Bourlard, head of Idiap’s Speech & audio processing research group, and his then colleague Y. Kamp published a milestone paper in this field. They demonstrated that the encoding performed by these neural networks could be achieved equally well with a well-known tool from linear algebra, singular value decomposition. This discovery not only explained how neural network autoencoders work, but also put an end to speculation that they could extract key features of the input signal ex nihilo.
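The equivalence between a linear autoencoder and a linear-algebra decomposition can be illustrated on a toy case. The sketch below is not the authors' code: it assumes made-up 2-D data, a single hidden unit with tied encoder and decoder weights, and a brute-force search over directions standing in for network training. All function names are hypothetical. It checks that the direction minimizing reconstruction error matches the first principal direction of the data, which is what singular value decomposition (named in the title of the 1988 paper) would return.

```python
import math

# Toy 2-D data (made-up values), spread roughly along one diagonal direction.
points = [(2.0, 1.9), (1.0, 1.2), (-1.0, -0.8),
          (-2.0, -2.1), (0.5, 0.3), (-0.5, -0.5)]


def center(data):
    """Subtract the mean so that directions pass through the origin."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    return [(x - mx, y - my) for x, y in data]


def reconstruction_error(data, angle):
    """Squared error of a 1-unit linear autoencoder with tied weights.

    Encoder: h = x . w, decoder: x_hat = h * w, where w is the unit
    vector at the given angle.
    """
    wx, wy = math.cos(angle), math.sin(angle)
    err = 0.0
    for x, y in data:
        h = x * wx + y * wy                      # encode to one number
        err += (x - h * wx) ** 2 + (y - h * wy) ** 2  # decode and compare
    return err


def principal_angle(data):
    """Angle of the first principal direction (closed form for 2x2)."""
    sxx = sum(x * x for x, _ in data)
    syy = sum(y * y for _, y in data)
    sxy = sum(x * y for x, y in data)
    return 0.5 * math.atan2(2.0 * sxy, sxx - syy)


def best_angle_by_search(data, steps=1800):
    """Brute-force search over [0, pi), standing in for training."""
    angles = [math.pi * k / steps for k in range(steps)]
    return min(angles, key=lambda a: reconstruction_error(data, a))


centered = center(points)
theta_pca = principal_angle(centered) % math.pi
theta_search = best_angle_by_search(centered)
print(f"linear algebra: {theta_pca:.4f} rad, search: {theta_search:.4f} rad")
```

Both angles agree up to the resolution of the search grid. Real autoencoders have more units and are trained by gradient descent, so this only illustrates the idea in its simplest form.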

Future improvements

Over three decades later, autoencoders are not dead. They are used in many domains, ranging from facial recognition to image processing. Hervé Bourlard and his colleague Selen Hande Kabil therefore decided to build on the work published in 1988.

They revisited the initial mathematical approach, extending it to a different, commonly used technique with more complex autoencoder architectures, as well as to another kind of signal known as discrete inputs. This extension is particularly relevant because autoencoders are now used extensively with discrete inputs, for example in natural language processing. There, the aim is often to analyse the semantic proximity between words in a given context. As the meanings associated with words are discrete by nature, the ability to characterize a “distance” between words mathematically is crucial to determining how they are related.
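To make the notion of a “distance” between words concrete, here is a minimal sketch. It assumes each word has already been mapped to a numeric vector and uses cosine distance, one common choice that the article does not specify. The three-dimensional vectors and the `embeddings` dictionary are invented for illustration and do not come from any trained model.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cos(angle between u and v); 0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


# Hypothetical word vectors (assumed values, for illustration only).
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

d_cat_dog = cosine_distance(embeddings["cat"], embeddings["dog"])
d_cat_car = cosine_distance(embeddings["cat"], embeddings["car"])
print(f"cat-dog: {d_cat_dog:.3f}, cat-car: {d_cat_car:.3f}")
```

With these invented vectors, "cat" ends up much closer to "dog" than to "car", which is the kind of relationship such a distance is meant to capture.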

Extending the initial findings to these new, more complex architectures and to much larger datasets that were not available in 1988, the researchers again confirmed that linear algebra often yields optimal solutions or, at least, provides the means to better understand and improve neural networks. “This is a small but crucial step towards more explainable AI, as data processing by these technologies often constitutes a black box. This lack of ability to understand the process undermines the trust in the final results,” Bourlard explains.

Beyond the fact that this publication cements the theory of neural networks by analysing how they function, the researcher hopes that this mathematical approach will inspire the next generation of scientists to question the tools they use to conduct their research. Furthermore, “If using maths and statistics is an alternative to energy-intensive autoencoders for certain tasks, there is a potential for less energy-demanding solutions,” Bourlard concludes.

More information

- Speech & audio processing research group

- “Autoencoders reloaded”, Hervé Bourlard & Selen Hande Kabil, 2022

- “Auto-association by multilayer perceptrons and singular value decomposition”, Hervé Bourlard & Y. Kamp, Biological Cybernetics, 1988