Custom Silicone Mask Attack Dataset (CSMAD)

Description

The Custom Silicone Mask Attack Dataset (CSMAD) contains presentation attacks mounted using six custom-made silicone masks. Each mask cost about USD 4000.

The dataset has been collected at the Idiap Research Institute. It is intended for face presentation attack detection experiments, where the presentation attacks have been mounted using a custom-made silicone mask of the person (or identity) being attacked.

The dataset contains videos of face presentations, as well as a set of files specifying the experimental protocol for the experiments presented in the corresponding publication.

Reference

If you publish results using this dataset, please cite the following publication.

Sushil Bhattacharjee, Amir Mohammadi and Sebastien Marcel: "Spoofing Deep Face Recognition With Custom Silicone Masks." in Proceedings of International Conference on Biometrics: Theory, Applications, and Systems (BTAS), 2018.

DOI: 10.1109/BTAS.2018.8698550

http://publications.idiap.ch/index.php/publications/show/3887

Data Collection

Face-biometric data has been collected from 14 subjects to create this dataset. Subjects participating in this data collection have played three roles: targets, attackers, and bona fide clients. The subjects represented in the dataset are referred to here with letter-codes: A..N. The subjects A..F have also been targets. That is, face data for these six subjects has been used to construct their corresponding flexible masks (made of silicone). These masks have been made by Nimba Creations Ltd., a special effects company.

Bona fide presentations have been recorded for all subjects A..N. Attack presentations (presentations where the subject wears one of the six masks) have been recorded for all six targets, performed by different subjects. That is, each target has been attacked several times, each time by a different attacker wearing the mask in question. This is one way of increasing the variability in the dataset. Another way we have increased the variability is by capturing presentations under four different lighting conditions:

- fluorescent ceiling light only

- halogen lamp illuminating from the left of the subject only

- halogen lamp illuminating from the right only

- both halogen lamps illuminating from both sides simultaneously

All presentations have been captured with a green uniform background. See the paper mentioned above for more details of the data-collection process.

Dataset Structure

The dataset is organized in three subdirectories: ‘attack’, ‘bonafide’, and ‘protocols’. The two directories ‘attack’ and ‘bonafide’ contain presentation videos and still images for attacks and bona fide presentations, respectively. The folder ‘protocols’ contains text files specifying the experimental protocol for vulnerability analysis of face-recognition (FR) systems.

The number of data files per category is as follows:

- ‘bonafide’: 87 videos, and 17 still images (in .JPG format). The still images are frontal face images captured using a Nikon Coolpix digital camera.

- ‘attack’: 159 videos, organized in two sub-folders: ‘WEAR’ (108 videos) and ‘STAND’ (51 videos)

The folder ‘attack/WEAR’ contains videos where the attack has been made by a person (attacker) wearing the mask of the target being attacked. The ‘attack/STAND’ folder contains videos where the attack has been made using the target’s mask mounted on an appropriate stand.
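As a sanity check after downloading, the file counts listed above can be compared against a local copy. The following is a minimal sketch, not an official tool: the function names `count_files` and `check_counts` are hypothetical, and it assumes that videos carry the ‘.h5’ extension, that still images carry a ‘.JPG’ extension (matched case-insensitively), and that files may sit at any depth below each folder.

```python
from pathlib import Path

# File counts as stated in this description, keyed by (sub-folder, extension).
EXPECTED = {
    ("bonafide", ".h5"): 87,
    ("bonafide", ".jpg"): 17,
    ("attack/WEAR", ".h5"): 108,
    ("attack/STAND", ".h5"): 51,
}


def count_files(root, subdir, suffix):
    """Count files below root/subdir whose extension equals suffix (case-insensitive)."""
    folder = Path(root) / subdir
    if not folder.is_dir():
        return 0
    return sum(
        1 for p in folder.rglob("*")
        if p.is_file() and p.suffix.lower() == suffix
    )


def check_counts(root):
    """Return {(subdir, suffix): (found, expected)} for every count that differs."""
    mismatches = {}
    for (subdir, suffix), expected in EXPECTED.items():
        found = count_files(root, subdir, suffix)
        if found != expected:
            mismatches[(subdir, suffix)] = (found, expected)
    return mismatches
```

Calling `check_counts` with the path of the extracted dataset returns an empty dictionary when all four counts match; any entry it does return shows the number found next to the number expected.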

Video File Format

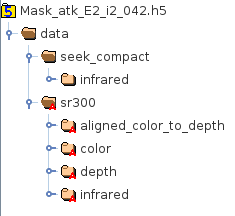

The video files for the face presentations are in ‘hdf5’ format (with file-extension ‘.h5’). The folder structure of the hdf5 file is shown in Figure 1. Each file contains data collected using two cameras:

- RealSense SR300 (from Intel): collects images/videos in visible light (RGB color), near infrared (NIR) at 860 nm wavelength, and depth maps

- Compact Pro (from Seek Thermal): collects thermal (long-wave infrared (LWIR)) images.

As shown in Figure 1, frames from the different channels (color, infrared, depth, thermal) from the two cameras are stored in separate directory hierarchies in the hdf5 file. Each file represents a video of approximately 10 seconds, or roughly 300 frames.

In the hdf5 file, the directory for SR300 also contains a subdirectory named ‘aligned_color_to_depth’. This folder contains post-processed data, where the frames of the depth channel have been aligned with those of the color channel based on the time-stamps of the frames.

Figure 1: File structure of hdf5 video files in this dataset.
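To see the hierarchy of a particular file without relying on the figure, the groups can be walked programmatically. The sketch below is illustrative only: `iter_leaves` and `describe_h5` are hypothetical helper names, and it assumes the third-party `h5py` package is installed. No group names are hard-coded, since the only one named in this description is ‘aligned_color_to_depth’.

```python
def iter_leaves(group, prefix=""):
    """Yield (path, node) for every non-group node below `group`.

    Works on any mapping-like object with .items(); h5py.File and
    h5py.Group provide that interface, and so do plain dicts.
    """
    for name, node in group.items():
        path = f"{prefix}/{name}"
        if hasattr(node, "items"):  # sub-group: descend into it
            yield from iter_leaves(node, path)
        else:  # dataset (leaf node)
            yield path, node


def describe_h5(filename):
    """Return (path, shape, dtype) for each dataset stored in an HDF5 file."""
    import h5py  # third-party; imported here so iter_leaves has no dependency

    with h5py.File(filename, "r") as f:
        return [
            (path, getattr(node, "shape", None), getattr(node, "dtype", None))
            for path, node in iter_leaves(f)
        ]
```

Running `describe_h5` on one of the ‘.h5’ files should list the datasets for the color, infrared, depth and thermal channels together with their array shapes; the exact paths are those shown in Figure 1.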

Experimental Protocol

The ‘protocols’ folder contains text files that specify the protocols for vulnerability analysis experiments reported in the paper mentioned above. Please see the README file in the protocols folder for details.