How to adapt your pretrained models for ASR?

With the growing popularity of self-supervised pretraining, a number of approaches based on auto-encoding and contrastive learning have been proposed for speech signals. However, it is not clear which techniques provide the largest gains for speech recognition on low-resource languages, or how best to adapt these pretrained models. Is it CTC or LFMMI that provides the largest gains?

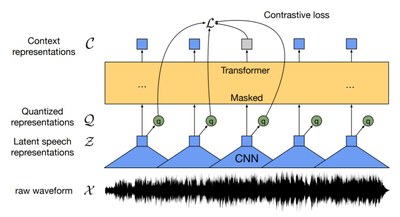

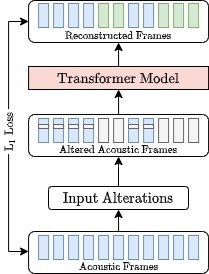

Researchers in the speech group at Idiap examine these choices for two popular self-supervised pretraining approaches: (1) Masked Acoustic Modeling, which is based on reconstructing clean audio from corrupted audio input, and (2) wav2vec 2.0, which uses contrastive learning to learn powerful speech representations. They compare two popular losses, CTC and LFMMI, for out-of-domain and cross-lingual adaptation of the pretrained networks in low-resource settings. Their findings suggest that wav2vec 2.0 pretraining provides the largest gains in every setting and significantly outperforms models that do not use pretraining. Moreover, CTC and LFMMI perform equally well for adaptation.
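To make the CTC objective mentioned above more concrete, here is a minimal pure-Python sketch of the CTC negative log-likelihood, computed with the standard forward recursion over a blank-augmented label sequence. It is an illustration only, not the code used in the reports: the function name `ctc_neg_log_likelihood` and the toy inputs are hypothetical, and in an actual adaptation run the per-frame log-probabilities would come from the pretrained network with an output layer added on top. LFMMI is not shown here.

```python
import math

NEG_INF = float("-inf")


def _logsumexp(values):
    """Numerically stable log(sum(exp(v))) for a list of log-values."""
    m = max(values)
    if m == NEG_INF:
        return NEG_INF
    return m + math.log(sum(math.exp(v - m) for v in values))


def ctc_neg_log_likelihood(log_probs, target, blank=0):
    """CTC loss for one utterance (hypothetical helper, for illustration).

    log_probs: list of T frames, each a list of log-probabilities over labels.
    target:    list of label ids without blanks.
    """
    # Blank-augmented sequence: [blank, y1, blank, y2, ..., blank]
    ext = [blank]
    for label in target:
        ext += [label, blank]
    num_states = len(ext)

    # alpha[s]: log-probability of all partial alignments that end in
    # state s at the current frame.
    alpha = [NEG_INF] * num_states
    alpha[0] = log_probs[0][ext[0]]
    if num_states > 1:
        alpha[1] = log_probs[0][ext[1]]

    for t in range(1, len(log_probs)):
        new_alpha = [NEG_INF] * num_states
        for s in range(num_states):
            candidates = [alpha[s]]                 # stay in the same state
            if s >= 1:
                candidates.append(alpha[s - 1])     # advance by one state
            # Skipping a blank is allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                candidates.append(alpha[s - 2])
            new_alpha[s] = _logsumexp(candidates) + log_probs[t][ext[s]]
        alpha = new_alpha

    # Valid alignments end in the last label or the trailing blank.
    final = [alpha[-1]] + ([alpha[-2]] if num_states > 1 else [])
    return -_logsumexp(final)


# Toy usage: 2 frames, uniform posteriors over {blank, "a", "b"}.
uniform = [math.log(1.0 / 3.0)] * 3
loss = ctc_neg_log_likelihood([uniform, uniform], target=[1])
```

In this toy case three of the nine possible frame-level paths collapse to the single label, so the loss equals log 3. For training, a toolkit implementation that also provides gradients would be the usual choice.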

For more details, see the research reports:

1. Lattice-Free MMI adaptation of self-supervised pretrained acoustic models

2. Comparing CTC and LFMMI for out-of-domain adaptation of wav2vec 2.0 acoustic model

| (a) Masked Acoustic Modeling | (b) Wav2vec 2.0 Training (taken from paper) |

Figure (a) shows the reconstruction-based Masked Acoustic Modeling pretraining, and (b) shows the contrastive training approach for wav2vec 2.0.