Can sparsity be our friend? Challenges in learning meaningful representations from speech

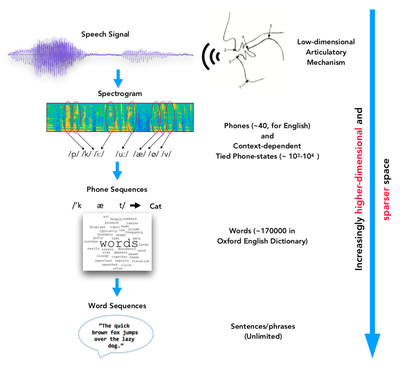

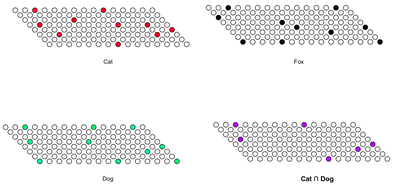

Nowadays, deep neural networks are used in many applications, yet they often operate as black boxes that are blind to the problem at hand. In contrast, we aim to create smart neural networks that draw on knowledge from speech modeling and neuroscience. Due to the intrinsic organization of human languages, speech is the end product of a hierarchical (e.g. words combining to form one utterance) and parsimonious (e.g. only one word out of thousands being spoken at a given time instant) process. As illustrated in Figure 1, the higher we move in the hierarchy, the higher dimensional and sparser the representation space becomes. Accordingly, we design neural networks that learn high dimensional sparse representations, focusing on their inherent benefits. High dimensional representations help to distribute information across dimensions, with each dimension responsible for covering particular latent patterns of speech. In addition, sparsity promotes the disentanglement of these patterns and hence plays an important role in obtaining meaningful representations.

This is illustrated in Figure 2, where the set of circles stands for the high dimensional sparse representation space and each unit circle denotes a single dimension. Each red circle covers a latent pattern, and together these patterns stand for the concept “cat”. Overlapping units indicate latent patterns that are shared by the content of “cat” and “dog”. Thanks to sparsity, such sharing is easy to detect and can also be exploited for unseen data samples.
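To make the idea of high dimensional sparse codes and shared units concrete, here is a minimal sketch, not the models developed in this work. It assumes a random linear projection with ReLU into a higher dimensional space, followed by k-winners-take-all (top-k) sparsification as one common way of imposing sparsity. The function names `project`, `top_k_sparsify` and `active_units`, the sizes, and the toy “cat”/“dog” vectors are all hypothetical; the vectors are random numbers, not speech features.

```python
import random


def project(x, weights):
    """Map input x to a higher dimensional space: one ReLU unit per weight row."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def top_k_sparsify(activations, k):
    """k-winners-take-all: keep the k largest activations and zero out the rest."""
    if not 0 < k <= len(activations):
        raise ValueError("k must satisfy 0 < k <= len(activations)")
    ranked = sorted(range(len(activations)), key=lambda i: activations[i], reverse=True)
    winners = set(ranked[:k])
    return [a if i in winners else 0.0 for i, a in enumerate(activations)]


def active_units(code):
    """Indices of the nonzero (active) dimensions of a sparse code."""
    return {i for i, a in enumerate(code) if a != 0.0}


# Toy setup (hypothetical sizes and inputs, not real speech features).
rng = random.Random(0)
in_dim, hid_dim, k = 8, 128, 8  # 8-dim input -> 128-dim code with at most 8 active units
weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(hid_dim)]

cat = [rng.gauss(0.0, 1.0) for _ in range(in_dim)]
# Assumption: a "related" input is modeled as a perturbed copy of "cat".
dog = [c + 0.5 * rng.gauss(0.0, 1.0) for c in cat]

code_cat = top_k_sparsify(project(cat, weights), k)
code_dog = top_k_sparsify(project(dog, weights), k)

# With sparse codes, shared latent patterns are simply the shared active indices.
shared = active_units(code_cat) & active_units(code_dog)
print(f"cat units: {sorted(active_units(code_cat))}")
print(f"dog units: {sorted(active_units(code_dog))}")
print(f"{len(shared)} of {k} active units shared: {sorted(shared)}")
```

Because only a handful of the 128 units are active, the overlap between two concepts reduces to a set intersection of active indices, mirroring the shared circles in Figure 2. Top-k selection is only one way to impose sparsity; an L1 penalty on the activations is a common alternative.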

The research challenge lies in managing the neural network dynamics such that patterns in the data are learned in a distributed manner, while the imposed sparsity does not limit the representational capacity. This work is supported by the Swiss National Science Foundation under the project “Sparse and HIerarchical Structures for Speech Modeling” (SHISSM).

Figure 1: Parsimonious hierarchical nature of human speech. Figure adapted from (Dighe, 2019).

Figure 2: High(er) dimensional sparse representations. The set of circles denotes the representation space, where each unit circle stands for a single dimension.