Distinguished Lectures

Note: Sessions are held exclusively in English.

Skill Learning from Human Demonstration for Autonomous and Collaborative Humanoid Robotics

Prof. Fei Chen, The Chinese University of Hong Kong

June 24, 2025, 10:00

Abstract: Humans possess a remarkable ability to collaborate physically, adapting their actions to changes in the environment, the movements of their partners and the demands of the task. Transferring these capabilities to humanoid robots for autonomous and collaborative manipulation holds transformative potential for embodied intelligence. This research introduces a skill learning framework that enables humanoid robots, particularly dual-arm systems, to learn complex autonomous and collaborative manipulation tasks from human demonstrations and then perform them. The framework integrates multi-modal human data, including motion trajectories and electromyography (EMG) signals, to extract key parameters such as limb impedance and adaptive strategies, enabling precise execution of both autonomous and collaborative tasks. It can also model human interaction strategies, allowing robots to adapt dynamically to human partners during cooperative operations such as object transportation and handling. Validation on diverse tasks, including stir-frying and Tai Chi, highlights the framework’s potential societal impact and its contribution to embodied artificial intelligence.

Bio: Dr. Fei Chen is an assistant professor of robotics at The Chinese University of Hong Kong (CUHK). He has participated in the European Robotics Challenge and led several EU and Italian national projects, including EU FP7 EUROC-AutoMAP, Italian National Project MIUR VINUM, EU H2020 Chist-Era Learn-Real, and the Italy-Japan International Cooperation Project Learn-Assist. Since joining CUHK, he has focused on research in humanoid robot manipulation and human-robot collaboration.

He has published over 100 papers in journals and conferences. He is a key member of the IEEE RAS Technical Committee on Neuro-Robotics and has held leadership positions at several prestigious robotics conferences, including Program Chair of IEEE ARSO 2024, IEEE RO-MAN 2024 and IEEE ROBIO 2024, Student Affairs Chair of ICRA 2024, and Local Chair of IROS 2025. He also serves as Associate Editor for multiple IEEE journals, including IEEE Transactions on Cognitive and Developmental Systems (TCDS) and IEEE Transactions on Emerging Topics in Computational Intelligence (TETCI), and reviews for numerous academic journals and conferences. Additionally, he chairs the IEEE Hong Kong Joint Chapter of RAS/CS and is an IEEE Senior Member.

AI For Precision Health: How To Leverage Vocal Biomarkers To Advance Health Research?

Guy Fagherazzi and Abir Elbéji

February 12, 2025

Abstract: This lecture will explore how AI can be used to develop and implement vocal biomarkers for health monitoring. Dr. Guy Fagherazzi and Dr. Abir Elbéji will present how voice analysis, powered by AI, can provide valuable insights into an individual’s health by detecting subtle changes in vocal patterns associated with symptoms or diseases. The lecture will cover the pipeline from raw voice data collection to the development of AI-driven models for screening, monitoring, and improving personalized healthcare. Practical applications, challenges, and the potential for integrating voice-based solutions into clinical settings will also be discussed.

Bio: Dr. Guy Fagherazzi is the Director of the Department of Precision Health at the Luxembourg Institute of Health (LIH) and leads the Deep Digital Phenotyping Research Unit. His research focuses on harnessing digital technologies and AI to develop innovative digital biomarkers for chronic disease monitoring, with a particular emphasis on diabetes. With a background in mathematics and epidemiology, Dr. Fagherazzi has authored over 300 scientific publications and is a recognized leader in digital health research. His work aims to advance precision health by integrating large-scale data from digital technologies, voice analysis, and digital phenotyping methods.

Dr. Abir Elbéji is a researcher specializing in the development of AI-driven vocal biomarkers for monitoring chronic disease symptoms. She is a Postdoctoral Researcher in the Deep Digital Phenotyping Research Unit at the Luxembourg Institute of Health (LIH), focusing on voice analysis to improve remote patient monitoring and personalized healthcare. Dr. Elbéji has contributed to the Colive Voice project, a global study identifying vocal biomarkers for various health conditions. Her work has led to voice-based algorithms for predicting type 2 diabetes and monitoring respiratory health. She holds an Engineering degree in Biology from INSAT, Tunisia, and a PhD from the University of Luxembourg.

How Far Can Transformers Reason? The Globality Barrier and Inductive Scratchpad

Samy Bengio, Senior Director at Apple and adjunct professor at EPFL

February 5, 2025

Abstract: Can Transformers predict new syllogisms by composing established ones? More generally, what types of targets can such models learn from scratch? Recent works show that Transformers can be Turing-complete in terms of expressivity, but this does not address learnability. This presentation puts forward the notion of 'globality degree' to capture when weak learning is efficiently achievable by regular Transformers; the globality degree measures the least number of tokens required, in addition to the token histogram, to correlate nontrivially with the target. As shown experimentally, and theoretically under additional assumptions, distributions with high globality cannot be learned efficiently. In particular, syllogisms cannot be composed over long chains. Furthermore, we show that (i) an agnostic scratchpad cannot help break the globality barrier, (ii) an educated scratchpad can help if it breaks the globality barrier at each step, and (iii) an 'inductive scratchpad' can both break the globality barrier and improve out-of-distribution generalization, e.g., generalizing to almost double the input size on some arithmetic tasks.

Bio: Samy Bengio (PhD in computer science, University of Montreal, 1993) has been a senior director of machine learning research at Apple since 2021 and an adjunct professor at EPFL since 2024. Before that, he was a distinguished scientist at Google Research (from 2007), where he headed part of the Google Brain team, and in the early 2000s he was at IDIAP, where he co-wrote the well-known open-source Torch machine learning library.

His research interests span many areas of machine learning such as deep architectures, representation learning, vision and language processing and more recently, reasoning.

He is an action editor of the Journal of Machine Learning Research and sits on the board of the NeurIPS Foundation. He was on the editorial board of the Machine Learning Journal and has served as program chair (2017) and general chair (2018) of NeurIPS, program chair of ICLR (2015, 2016), and general chair of BayLearn (2012-2015), MLMI (2004-2006) and NNSP (2002). He has also served on the program committees of several international conferences, such as NeurIPS, ICML, ICLR, ECML and IJCAI.

More details can be found at https://bengio.abracadoudou.com.

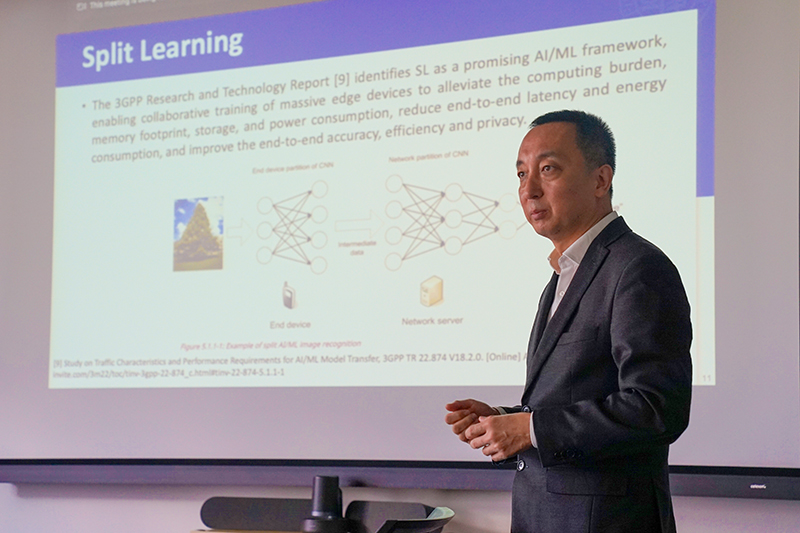

Parallel Split Learning for Wireless Networks

Yue Gao, Fudan University, China

October 11, 2024

Abstract: In wireless networks, edge intelligence promises to revolutionise how smartphones and base stations process and analyse data by bringing AI capabilities closer to the source of data generation. This talk introduces Split Learning (SL), a distributed deep learning paradigm that enables resource-constrained devices to offload substantial training workloads to edge servers through layer-wise model partitioning. By training in parallel across multiple devices, SL addresses the latency and bandwidth challenges of traditional centralised and federated learning, enabling efficient, privacy-preserving data processing at the edge of wireless networks. I will present our recent work on Efficient Parallel Split Learning (EPSL), designed to overcome the limitations of existing parallel split learning schemes. EPSL improves training efficiency by parallelising client-side computations and aggregating last-layer gradients, which reduces server-side training and communication overhead.

Bio: Yue Gao is a Chair Professor at the School of Computer Science and Dean of the Institute of Space Internet at Fudan University, China. He is a Fellow of the IEEE, the IET and the CIC. He received his MSc and PhD from Queen Mary University of London (QMUL), U.K., in 2003 and 2007, respectively. He has worked as a lecturer, senior lecturer, reader, and professor at QMUL and the University of Surrey. His research interests include satellite internet and AI-powered networks. He was a co-recipient of the EU Horizon Prize Award on Collaborative Spectrum Sharing in 2016 and was elected an Engineering and Physical Sciences Research Council (EPSRC) Fellow in 2017. He is a member of the Board of Governors and a Distinguished Lecturer of the IEEE Vehicular Technology Society (VTS), Chair of the IEEE ComSoc Wireless Communication Technical Committee, and past Chair of the IEEE ComSoc Technical Committee on Cognitive Networks.