FAQ

How to crop a face?

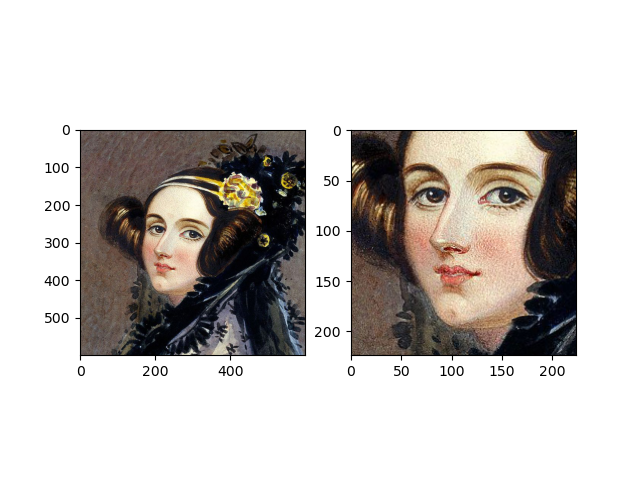

The recipe below shows how to set up a face cropper based on eye positions.

    import bob.bio.face
    import bob.io.base
    import bob.io.image

    # Load Ada's image
    image = bob.io.base.load("./img/838_ada.jpg")

    # Set Ada's eye positions
    annotations = dict()
    annotations["reye"] = (265, 203)
    annotations["leye"] = (278, 294)

    # Final cropped size
    cropped_image_size = (224, 224)

    # Where the eyes should be located after the crop
    cropped_positions = {"leye": (65, 150), "reye": (65, 77)}

    face_cropper = bob.bio.face.preprocessor.FaceCrop(
        cropped_image_size=cropped_image_size,
        cropped_positions=cropped_positions,
        color_channel="rgb",
    )

    # The cropper always operates on a batch of images
    cropped_image = face_cropper.transform([image], annotations=[annotations])

    # %matplotlib widget
    import matplotlib.pyplot as plt

    # Show the original image next to the cropped one
    figure = plt.figure()
    plt.subplot(121)
    bob.io.image.imshow(image)
    plt.subplot(122)
    bob.io.image.imshow(cropped_image[0].astype("uint8"))
    figure.show()
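
To build some intuition for what an eye-based crop has to do, the sketch below computes a similarity transform (scale, rotation, translation) that moves two annotated eye positions onto two target positions. It is a plain-Python illustration, not the implementation used by FaceCrop. The function name eye_alignment_transform is hypothetical, and the code assumes that positions are given as (y, x) pairs, which is what the values in the recipe above suggest.

```python
import cmath
import math


def eye_alignment_transform(annotations, cropped_positions):
    """Hypothetical helper: similarity transform aligning two eye positions.

    Both arguments map "reye" and "leye" to (y, x) pairs (assumed ordering).
    Returns the scale factor, the rotation angle in degrees, and a function
    mapping a source (y, x) point to its (y, x) location in the crop.
    """

    def to_complex(point):
        y, x = point
        return complex(x, y)

    src_r = to_complex(annotations["reye"])
    src_l = to_complex(annotations["leye"])
    dst_r = to_complex(cropped_positions["reye"])
    dst_l = to_complex(cropped_positions["leye"])

    # A similarity transform in the plane can be written as z -> a * z + b,
    # where abs(a) is the scale and phase(a) is the rotation angle.
    a = (dst_l - dst_r) / (src_l - src_r)
    b = dst_r - a * src_r

    def to_crop(point):
        z = a * to_complex(point) + b
        return (z.imag, z.real)

    return abs(a), math.degrees(cmath.phase(a)), to_crop


# Values taken from the recipe above
annotations = {"reye": (265, 203), "leye": (278, 294)}
cropped_positions = {"leye": (65, 150), "reye": (65, 77)}

scale, angle, to_crop = eye_alignment_transform(annotations, cropped_positions)
print(f"scale={scale:.3f}, rotation={angle:.2f} degrees")
print("right eye lands at", to_crop(annotations["reye"]))
print("left eye lands at", to_crop(annotations["leye"]))
```

By construction, both eyes land exactly on the requested cropped positions. Every other pixel of the face follows the same scale and rotation, which is why the choice of cropped positions controls how much of the face ends up in the crop.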

How to choose the cropped positions?

The ideal cropped positions depend on the application in which the face cropper is used. Some face embedding extractors work well on loosely cropped faces, while others require tightly cropped faces. We provide a few reasonable defaults, which are used in our implemented baselines. They are accessible through the following function:

    from bob.bio.face.utils import get_default_cropped_positions

    mode = "legacy"
    cropped_image_size = (160, 160)
    annotation_type = "eyes-center"
    cropped_positions = get_default_cropped_positions(mode, cropped_image_size, annotation_type)

There are currently three available modes:

- legacy: Tight crop, used in non-neural-net baselines such as gabor-graph and lgbphs. It is typically used with a 5:4 aspect ratio for the cropped_image_size.
- dnn: Loose crop, used for neural-net baselines such as the ArcFace or FaceNet models.
- pad: Tight crop, used in some PAD baselines.
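
One rough way to compare how tight two sets of cropped positions are is to look at the distance between the eyes relative to the width of the crop: the larger the ratio, the tighter the crop. The helper below is a hypothetical illustration and not part of bob.bio.face. It assumes positions are (y, x) pairs and sizes are (height, width) pairs, and it uses the positions from the first recipe on this page.

```python
import math


def eye_distance_ratio(cropped_positions, cropped_image_size):
    """Hypothetical helper: inter-eye distance divided by the crop width."""
    (ly, lx) = cropped_positions["leye"]
    (ry, rx) = cropped_positions["reye"]
    _, width = cropped_image_size  # assumed (height, width) ordering
    return math.hypot(ly - ry, lx - rx) / width


# Positions and size from the first recipe on this page
ratio = eye_distance_ratio({"leye": (65, 150), "reye": (65, 77)}, (224, 224))
print(f"The eyes span {ratio:.1%} of the crop width")
```

The same function can be applied to the dictionary returned by get_default_cropped_positions for each mode to see how the defaults differ.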

The example below shows these crops for the eyes-center annotation type.

    import matplotlib.pyplot as plt

    import bob.io.base
    import bob.io.image
    from bob.bio.face.preprocessor import FaceCrop
    from bob.bio.face.utils import get_default_cropped_positions

    src = bob.io.base.load("../img/cropping_example_source.png")
    modes = ["legacy", "dnn", "pad"]
    cropped_images = []

    SIZE = 160
    for mode in modes:
        # Pick the crop size for this mode (legacy uses a 5:4 aspect ratio)
        if mode == "legacy":
            cropped_image_size = (SIZE, 4 * SIZE // 5)
        else:
            cropped_image_size = (SIZE, SIZE)

        annotation_type = "eyes-center"
        # Load default cropped positions
        cropped_positions = get_default_cropped_positions(
            mode, cropped_image_size, annotation_type
        )

        # Instantiate cropper and crop
        cropper = FaceCrop(
            cropped_image_size=cropped_image_size,
            cropped_positions=cropped_positions,
            fixed_positions={"reye": (480, 380), "leye": (480, 650)},
            color_channel="rgb",
        )

        cropped_images.append(cropper.transform([src])[0].astype("uint8"))

    # Visualize the original image and the three crops in a 2x2 grid
    fig, axes = plt.subplots(2, 2, figsize=(10, 10))

    for i, (img, label) in enumerate(
        zip([src] + cropped_images, ["original"] + modes)
    ):
        ax = axes[i // 2, i % 2]
        ax.axis("off")
        ax.imshow(bob.io.image.to_matplotlib(img))
        ax.set_title(label)