ICPR10

Oya Aran and Daniel Gatica-Perez

in the 20th Int'l Conf. on Pattern Recognition, Istanbul, Turkey, 2010

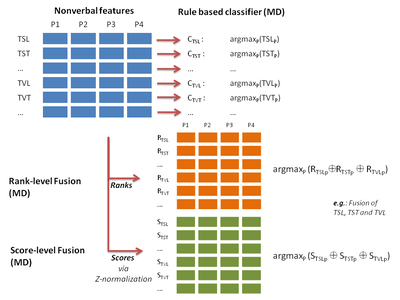

This work addresses the question of fusing audio and visual nonverbal cues to estimate the most dominant person in small group interactions, and shows that combining multimodal cues yields higher accuracies. In addition to the audio and visual nonverbal features, we present a new set of audio-visual features that combine information from both modalities at the feature extraction level. We also apply score-level and rank-level fusion techniques for multimodal fusion. For dominance estimation, we use simple rule-based estimators, which do not require labeled training data.
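To make the fusion step concrete, here is a minimal Python sketch of score-level and rank-level fusion followed by a rule-based decision. It is an illustration under stated assumptions, not the implementation used in the paper: the feature names, the toy values, the z-score normalization, and the rule "the participant with the highest fused value is the most dominant" are all assumptions made for this example.

```python
# Illustrative sketch only; feature names and values are hypothetical.

def zscore(values):
    """Normalize one feature across participants (zero mean, unit variance)."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    return [0.0] * n if std == 0 else [(v - mean) / std for v in values]

def ranks(values):
    """Rank participants on one feature: 1 = highest value, ties share a rank."""
    ordered = sorted(values, reverse=True)
    return [ordered.index(v) + 1 for v in values]

def score_fusion(features):
    """Score-level fusion: sum the normalized feature values per participant."""
    normalized = [zscore(v) for v in features.values()]
    return [sum(col) for col in zip(*normalized)]

def rank_fusion(features):
    """Rank-level fusion: sum the per-feature ranks per participant."""
    per_feature = [ranks(v) for v in features.values()]
    return [sum(col) for col in zip(*per_feature)]

def estimate(features, method="score"):
    """Return (most_dominant, least_dominant) participant indices.

    Assumed rule: a larger fused score, or a smaller fused rank sum,
    means more dominant. No labeled training data is involved.
    """
    if method == "score":
        fused = score_fusion(features)
        people = sorted(range(len(fused)), key=lambda i: -fused[i])
    elif method == "rank":
        fused = rank_fusion(features)
        people = sorted(range(len(fused)), key=lambda i: fused[i])
    else:
        raise ValueError("method must be 'score' or 'rank'")
    return people[0], people[-1]

# Hypothetical per-participant features for a four-person meeting.
toy_features = {
    "speaking_time": [120.0, 45.0, 80.0, 30.0],    # audio cue (made up)
    "visual_activity": [80.0, 90.0, 75.0, 20.0],   # visual cue (made up)
}

md_score, ld_score = estimate(toy_features, method="score")
md_rank, ld_rank = estimate(toy_features, method="rank")
```

On these toy values both fusion variants pick participant 0 as most dominant and participant 3 as least dominant. Score-level fusion keeps the size of the gaps between participants, while rank-level fusion keeps only their ordering, so the two can disagree when one feature has an outlier.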

On two dominance estimation tasks performed on the DOME dataset, estimating the most dominant (MD) person and estimating the least dominant (LD) person, our experiments show that visual information is necessary and that the highest accuracies are achieved only by combining audio and visual cues.