Camera Calibration

Camera calibration consists in estimating the parameters of a camera from a set of images of a known pattern captured by that camera. The calibration procedure implemented in calibration.py uses capture files containing synchronous images from several cameras to estimate first the intrinsic, then the extrinsic, parameters of the cameras. The script mostly wraps opencv’s algorithms for use with bob.io.stream data files, so it is highly recommended to read opencv’s documentation before using this script.

Camera Parameters

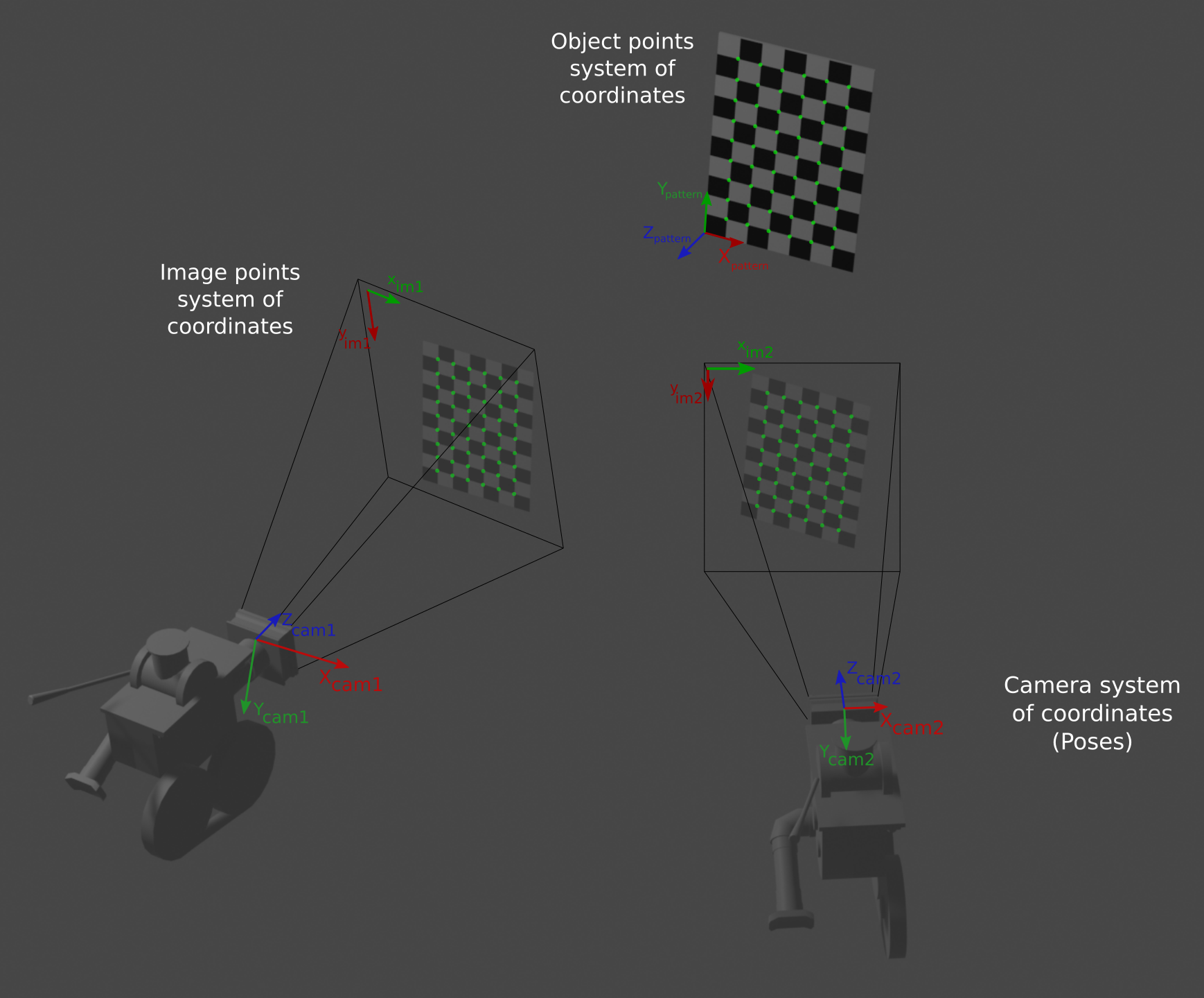

The intrinsic parameters of a camera are the parameters that do not depend on the camera’s environment. They are composed of the camera matrix, which describes the transformation from a 3D point expressed in the camera coordinate system to a 2D pixel in the image plane assuming a distortion-free projection, and the distortion coefficients of the camera. Opencv’s documentation explains its camera and distortion model.
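As an illustration, the projection described by the camera matrix and the distortion coefficients can be sketched in a few lines of Python. This is a minimal sketch and not the code used by calibration.py: the function name `project_point` is hypothetical, the point is assumed to be given in the camera frame, and the distortion coefficients are assumed to follow the 5-coefficient ordering (k1, k2, p1, p2, k3) of opencv's model.

```python
def project_point(point_3d, fx, fy, cx, cy, dist_coefs=(0.0, 0.0, 0.0, 0.0, 0.0)):
    """Project a 3D point given in the camera frame to pixel coordinates.

    dist_coefs is assumed to be ordered as (k1, k2, p1, p2, k3).
    """
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("the point must be in front of the camera (z > 0)")
    k1, k2, p1, p2, k3 = dist_coefs

    # Normalised image coordinates (pinhole projection, before distortion).
    xn, yn = x / z, y / z
    r2 = xn * xn + yn * yn

    # Radial and tangential distortion.
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = xn * radial + 2.0 * p1 * xn * yn + p2 * (r2 + 2.0 * xn * xn)
    yd = yn * radial + p1 * (r2 + 2.0 * yn * yn) + 2.0 * p2 * xn * yn

    # Camera matrix: focal lengths scale the coordinates, the principal point shifts them.
    return fx * xd + cx, fy * yd + cy


# A point on the optical axis lands on the principal point.
u, v = project_point((0.0, 0.0, 1.0), fx=1200.0, fy=1200.0, cx=540.5, cy=768.0)
```

With all distortion coefficients set to 0, the function reduces to the distortion-free projection encoded by the camera matrix alone.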

The extrinsic parameters are the rotation and translation of the camera, representing the change of reference frame from the world coordinate system to the camera coordinate system. Usually, the position and orientation of one camera, the reference, is used as the world coordinate system. In that case, the extrinsic parameters of the other cameras encode their position and rotation relative to the reference, which enables stereo processing.

Intrinsic Parameters Estimation

To estimate the intrinsic parameters of a camera, an image of a pattern is captured. The pattern contains points whose positions and distances with respect to each other are known. The first patterns to be used were checkerboards: the points of interest are the inner corners of the checkerboard. On the physical pattern these points are aligned, and the distance between them is given by the length of a checkerboard square. In an image of the pattern, however, the corners are no longer aligned because of distortion, which makes it possible to estimate the distortion coefficients of the camera’s lens. The number of pixels between corners maps to the real-world distance (the square length), which makes it possible to estimate the camera matrix.

Opencv implements methods to detect checkerboard corners, which give precise measurements of the corners in the image, as required for intrinsic parameter estimation. However, these methods are not robust to occlusion: if part of the pattern is hidden, the detection fails. To circumvent this problem, a Charuco pattern can be used: it has unique (for a given pattern size) Aruco symbols placed in its white squares. The symbols make it possible to identify each corner individually, so the detection works even when not all corners are visible.

See opencv’s documentation for more explanations on Charuco patterns and how to generate them: Detection of ArUco Markers, Detection of ChArUco Corners, Calibration with ArUco and ChArUco.

Extrinsic Parameters Estimation

Once the intrinsic parameters of a camera are known, we are able to “rectify” the camera’s images, that is, to correct the distortion and align the images. When this is done, we can tackle the problem of finding the position of the camera with respect to the real-world coordinate system. Indeed, the mapping between a 3D point in the real world and the corresponding 2D point in the camera’s pixels is a projection parametrized by the camera’s pose. Given enough pairs of (3D, 2D) points, the projection parameters can be estimated: this is the so-called Perspective-n-Point (PnP) problem, for which opencv provides a solver (see opencv’s PnP solver documentation).

Note

The PnP algorithm works with a set of points whose coordinates are known both in the coordinate system of the camera’s images (pixel position in the image) and in the world coordinate system. The origin and orientation of the world coordinate system can be chosen arbitrarily: the result of the PnP is the camera pose with respect to that choice. The simplest choice is to align the coordinate system with the checkerboard axes: that way the 3D coordinates of the corners are (square_number_along_X * square_length, square_number_along_Y * square_length, 0).

A minimum of 4 points is needed for the PnP algorithm, but more points improve the precision and the robustness to outliers.

However, we are less interested in knowing the pose of a single camera than in knowing the position of one camera with respect to another. In opencv, this is performed by the stereoCalibrate function, which performs the following steps:

1. Receive a list of images for each camera, showing the same pattern positions (for instance, the images were taken simultaneously with both cameras looking at the pattern).

2. For each pair of images (camera 1, camera 2), solve the PnP problem for each camera. Then, from the poses of the 2 cameras with respect to the pattern, compute the pose of one camera with respect to the other.

3. Average the poses obtained for each image pair in the previous step.

4. Fine-tune the pose by optimizing the reprojection error of all points in both cameras (Levenberg–Marquardt optimizer).
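The pose composition in the second step can be written out explicitly. The sketch below is not opencv's implementation: the helper names `rotation_z` and `relative_pose` are hypothetical, and it assumes each pose maps pattern coordinates to camera coordinates as X_cam = R @ X_pattern + t. The averaging and the final optimization are not shown.

```python
import numpy as np


def rotation_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad around the Z axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def relative_pose(rot_1, trans_1, rot_2, trans_2):
    """Pose of camera 2 relative to camera 1, from the pattern pose seen by each camera.

    Each input pose satisfies X_cam = rot @ X_pattern + trans.
    The returned (rot, trans) satisfies X_cam2 = rot @ X_cam1 + trans.
    """
    rot = rot_2 @ rot_1.T
    trans = trans_2 - rot @ trans_1
    return rot, trans


# Pattern pose seen from camera 1, and a known camera-1-to-camera-2 transform to check against.
rot_1, trans_1 = rotation_z(0.3), np.array([10.0, -5.0, 500.0])
rot_12, trans_12 = rotation_z(-0.1), np.array([-60.0, 0.0, 0.0])
rot_2, trans_2 = rot_12 @ rot_1, rot_12 @ trans_1 + trans_12

rot, trans = relative_pose(rot_1, trans_1, rot_2, trans_2)
```

In this synthetic setup, `rot` and `trans` recover `rot_12` and `trans_12` up to floating-point error. With real detections each image pair gives a slightly different estimate, which is why the estimates are then averaged and refined.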

Note

The stereoCalibrate function is also able to optimize the intrinsic parameters of the cameras. However, as the number of parameters to optimize grows, the results may not be satisfactory. It is often preferable to first find the intrinsic parameters of each camera, then fix them and optimize only the extrinsic parameters.

calibration.py

The calibration script performs the following steps:

1. Find all the capture files in the input folder. The capture files are hdf5 files containing a dataset per camera, and one or more frames per dataset. If specified in the config, only 1 frame will be loaded.

2. For each frame of each camera, run the detection to find the pattern points.

3. For each camera, using the frames where the pattern could be detected in the previous step, estimate the camera’s intrinsic parameters.

4. Finally, the extrinsic parameters of all cameras with respect to a reference (passed as argument) are computed, using the frames where enough pattern points were detected for both cameras in the second step. The poses of the cameras are then displayed, and the calibration results are written to a json file.

The calibration script can be called with:

$ python calibration.py -c calibration_config.json -r ref_camera -o output.json -v

usage: calibration.py [-h] [-c CONFIG] [-i INTRINSICS_ONLY] -r REFERENCE

[-o OUTPUT_FILE] [-v]

Calibration script computing intrinsic and extrinsic parameters of cameras

from capture files. This script performs the following steps: 1. For all

capture files specified in the configuration json, detect the pattern points

(first on the image, if failed processes the image (grey-closing,

thresholding) and tries again) 2. Using the frames were the pattern points

were detected in step 1: estimate the intrinsic parameters for each stream

(camera) in the capture files. 3. Againg using the pattern points detected in

step 1, the extrinsic parameters of the cameras are estimated with respect to

the reference. 4. The camera poses are displayed, and the results are saved to

a json file.

optional arguments:

-h, --help show this help message and exit

-c CONFIG, --config CONFIG

An absolute path to the JSON file containing the

calibration configuration.

-i INTRINSICS_ONLY, --intrinsics-only INTRINSICS_ONLY

Compute intrinsics only.

-r REFERENCE, --reference REFERENCE

Stream name in the data files of camera to use as

reference.

-o OUTPUT_FILE, --output_file OUTPUT_FILE

Output calibration JSON file.

-v, --verbosity Output verbosity: -v output calibration result, -vv

output the dataframe, -vvv plots the target detection.

An example of a calibration configuration file is given below. The -r switch is used to specify the camera to use as the reference for the extrinsic parameter estimation. The -v switch controls the verbosity of the script: setting -vvv will, for instance, display the detected pattern points in each frame, to help with debugging.

Calibration configuration example:

{

"capture_directory" : "/path/to/capture/folder/",

"stream_config" : "/path/to/data/streams/config.json",

"pattern_type": "charuco",

"pattern_rows": 10,

"pattern_columns": 7,

"square_size" : 25,

"marker_size" : 10.5,

"threshold" : 151,

"closing_size" : 2,

"intrinsics":

{

"fix_center_point" : false,

"fix_aspect_ratio" : false,

"fix_focal_length" : false,

"zero_tang_dist" : false,

"use_intrinsic_guess" : false,

"intrinsics_guess" :

{

"f" : [1200, 1200],

"center_point" : [540.5, 768],

"dist_coefs" : [0 ,0, 0, 0, 0]

}

},

"frames_list": {

"file_1.h5" : [0],

"file_2.h5" : [2]

},

"display":

{

"axe_x" : [-60, 60],

"axe_y" : [-60, 60],

"axe_z" : [-60, 60]

}

}
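A configuration of this form can be loaded and sanity-checked with Python's standard json module before running the script. The sketch below is only an illustration: the helpers `check_calibration_config` and `camera_matrix_guess` are hypothetical and not part of calibration.py, only a subset of the keys is used, and the interpretation of "f" as [fx, fy] and of "center_point" as [cx, cy] is an assumption.

```python
import json

# Trimmed-down copy of a calibration configuration (only the keys used in this sketch).
config_text = """
{
    "pattern_type": "charuco",
    "pattern_rows": 10,
    "pattern_columns": 7,
    "square_size": 25,
    "intrinsics": {
        "intrinsics_guess": {
            "f": [1200, 1200],
            "center_point": [540.5, 768],
            "dist_coefs": [0, 0, 0, 0, 0]
        }
    }
}
"""


def check_calibration_config(config):
    """Hypothetical helper: basic sanity checks on a calibration configuration."""
    if config["pattern_type"] not in ("chessboard", "charuco"):
        raise ValueError(f"Unsupported pattern type: {config['pattern_type']}")
    for key in ("pattern_rows", "pattern_columns"):
        if config[key] < 2:
            raise ValueError(f"{key} must be at least 2")
    if config["square_size"] <= 0:
        raise ValueError("square_size must be positive")


def camera_matrix_guess(guess):
    """Build a 3x3 camera matrix (nested lists) from an intrinsics_guess entry.

    Assumes f = [fx, fy] and center_point = [cx, cy].
    """
    fx, fy = guess["f"]
    cx, cy = guess["center_point"]
    return [[fx, 0.0, cx], [0.0, fy, cy], [0.0, 0.0, 1.0]]


config = json.loads(config_text)
check_calibration_config(config)
cam_mat = camera_matrix_guess(config["intrinsics"]["intrinsics_guess"])
```

Note that `json.loads` is strict: a trailing comma after the last entry of an object or a list raises a `json.JSONDecodeError`.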

capture_directory : Path to the folder containing the capture files.

stream_config : Path to the configuration file describing the streams in the capture files (stream configuration files from bob.io.stream).

pattern_type : Type of pattern to detect in the captures. Only chessboard and charuco are currently supported.

pattern_rows : Number of squares along the Y axis of the target.

pattern_columns : Number of squares along the X axis of the target.

square_size : Length of a square in the pattern. This is used as the scale for real-world distances: for instance, the distance between the cameras in the extrinsic parameters will have the same unit as the square size.

marker_size : Size of a marker in a charuco pattern.

threshold : Threshold to use for image processing (adaptive thresholding) to help with the pattern detection.

closing_size : Size of the grey closing for image processing to help with the pattern detection.

intrinsics : Groups the parameters of the algorithm used for intrinsic parameter estimation, that is, the parameters of opencv's calibrateCameraCharuco or calibrateCamera functions.

fix_center_point : The principal point is not changed during the global optimization. It stays at the center or at the location specified in the intrinsic guess if the guess is used.

fix_aspect_ratio : The functions consider only fy as a free parameter. The ratio fx/fy stays the same as in the input cameraMatrix (fx, fy: focal lengths in the camera matrix).

fix_focal_length : Fix fx and fy (focal lengths in the camera matrix).

zero_tang_dist : Tangential distortion coefficients are fixed to 0.

use_intrinsic_guess : Use the intrinsics_guess as a starting point for the algorithm.

intrinsics_guess : Starting point for the intrinsic parameter values.

frames_list : Dictionary whose keys are the capture files, and whose values are lists of the frames to use in each file. Only the files listed here will be used for the calibration.

display : Axis limits when displaying the camera poses.

Calibration API

Calibration script computing intrinsic and extrinsic parameters of cameras from capture files.

This script performs the following steps:

1. For all capture files specified in the configuration json, detect the pattern points (first on the raw image; if that fails, the image is processed (grey-closing, thresholding) and the detection is tried again).

2. Using the frames where the pattern points were detected in step 1, estimate the intrinsic parameters for each stream (camera) in the capture files.

3. Again using the pattern points detected in step 1, estimate the extrinsic parameters of the cameras with respect to the reference.

4. Display the camera poses and save the results to a json file.

- bob.ip.stereo.calibration.preprocess_image(image, closing_size=15, threshold=201, verbosity=None)

Image processing to improve pattern detection for calibration.

Performs grey closing and adaptive thresholding to enhance the pattern visibility in the calibration image.

- Parameters

image (numpy.ndarray) – Calibration image. (uint16)

closing_size (int) – Grey closing size, by default 15.

threshold (int) – Threshold parameter passed to opencv’s adaptiveThreshold, by default 201.

- Returns

image – Processed image. (uint8)

- Return type

numpy.ndarray

- bob.ip.stereo.calibration.detect_chessboard_corners(image, pattern_size, verbosity)

Detect the chessboard pattern corners in a calibration image.

This function returns the coordinates of the corners in between the chessboard squares, in the coordinate system of the image pixels. With a chessboard pattern, either all corners are found, or the detection fails and None is returned.

- Parameters

image (numpy.ndarray) – Calibration image. (uint8)

pattern_size (tuple(int, int)) – Number of rows, columns in the pattern. These are the numbers of corners in between the board squares, so each is 1 less than the actual number of squares in a row/column.

verbosity (int) – If verbosity is strictly greater than 2, the detected chessboard will be plotted on the image.

- Returns

im_points – Array of the detected corner coordinates in the image.

- Return type

numpy.ndarray

- bob.ip.stereo.calibration.detect_charuco_corners(image, charuco_board, verbosity)

Detects charuco pattern corners in a calibration image.

This function returns the coordinates of the corners in between the charuco board squares, in the coordinate system of the image pixels. The charuco detection method is able to detect individual charuco symbols in the pattern, therefore the detection can return only a subset of the points when not all pattern symbols are detected. In order to know which symbols were detected, the function also returns the identifiers of the detected points.

Note: This function detects the charuco pattern inside the board squares, therefore the pattern size of the board (#rows, #cols) corresponds to the number of squares, not the number of corners in between squares.

- Parameters

image (numpy.ndarray) – Calibration image (grayscale, uint8)

charuco_board (cv2.aruco_CharucoBoard) – Dictionary-like structure defining the charuco patterns.

verbosity (int) – If verbosity is strictly greater than 2, the detected patterns will be plotted on the image.

- Returns

im_points (numpy.ndarray) – Array of the detected corners coordinates in the image. Shape: (#detected_pattern, 1, 2)

ids (numpy.ndarray) – Identifiers of the detected patterns. Shape: (#detected_pattern, 1)

- bob.ip.stereo.calibration.detect_patterns(directory_path, data_config_path, pattern_type, pattern_size, params, charuco_board=None, verbosity=0)

Detects chessboard or charuco patterns in the capture files.

For all files in the calibration parameters (json loaded in params), this function detects the pattern and returns the pattern points coordinates for each stream in the files. The results (image points and IDs for charuco) are stored in a DataFrame. This function also returns a dictionary with the image shape per camera, which is used to initialize the camera intrinsic matrices.

If the pattern is a chessboard, the same number of corners is found for all detected patterns. For a charuco board, the detection can find a subset of the symbols present on the pattern, so the number is not always the same. The IDs of the detected symbols are then required to estimate the camera parameters.

The pattern points dataframe has the following structure:

| | cameraXXX | cameraYYY | cameraZZZ |
|---|---|---|---|
| fileAA:frameAA | ptr_pts | ptr_pts | ptr_pts |
| fileBB:frameBB | ptr_pts | ptr_pts | ptr_pts |
| fileCC:frameCC | ptr_pts | ptr_pts | ptr_pts |

Notes

Color images (in bob’s format) are first converted to opencv’s grayscale format

The detection is first run on the raw images. If it fails, it is tried on the pre-processed images.

- Parameters

directory_path (str) – Path to the directory containing the calibration data.

data_config_path (str) – Path to the json file describing the data (names, shape, …).

pattern_type (str) – “charuco” or “chessboard”: Type of pattern to detect.

pattern_size (tuple of int) – Number of rows, number of columns in the pattern.

params (dict) – Calibration parameters (image processing parameters, etc…)

charuco_board (cv2.aruco_CharucoBoard) – Dictionary-like structure defining the charuco pattern, by default None. Required if pattern_type is “charuco”.

verbosity (int) – Verbosity level, higher for more output, by default 0.

- Returns

pattern_points (pandas.DataFrame) – Dataframe containing the detected pattern points in the data.

image_size (dict) – Shape of the image for each stream in the data, which is required to initialize the camera parameter estimation.

- Raises

ValueError – If the charuco board is not specified when the pattern_type is “charuco”

ValueError – If the streams in the data files do not have the same number of frames.

ValueError – If the length of the specified list of frames doesn’t match the number of calibration files.

ValueError – If the specified pattern type is not supported. (Only “chessboard” and “charuco” supported.)

- bob.ip.stereo.calibration.create_3Dpoints(pattern_size, square_size)

Returns the 3D coordinates of the board corners, in the coordinate system of the calibration pattern.

With a pattern size (X,Y) or (rows, cols), the order of the corners iterates along X first, then Y. The 3D object points are created as follows: (0,0,0), …, (X-1,0,0), (0,1,0), …, (X-1,Y-1,0). The 3rd coordinate is always 0, since all the points are in the plane of the board. Warning: a charuco board with a pattern size (X,Y) is not equivalent to a board with a pattern size (Y,X) rotated by +/- 90 degrees: it has different aruco markers on its board.

The coordinates are then scaled by the size of a square in the pattern, to use metric distances. (This scale carries over to the translation distance between the cameras in the extrinsic parameter estimation.)

- Parameters

pattern_size (tuple of int) – Number of rows, number of columns in the pattern. These are the numbers of corners in between the pattern squares, which is 1 less than the number of squares.

square_size (float) – Width (or height) of a square in the pattern. The unit of this distance (eg, mm) will be the unit of distance in the calibration results.

- Returns

object_points – Coordinates of the pattern corners, with respect to the board.

- Return type

numpy.ndarray
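The corner ordering described above can be reproduced with a few lines of numpy. This is a hypothetical re-implementation for illustration only, not the source of create_3Dpoints: the function name `make_object_points` and the float32 dtype are assumptions.

```python
import numpy as np


def make_object_points(pattern_size, square_size):
    """Hypothetical sketch: 3D corner coordinates in the board plane (Z = 0)."""
    n_x, n_y = pattern_size
    # With the default "xy" indexing, raveling the grids makes X vary fastest, then Y.
    grid_x, grid_y = np.meshgrid(np.arange(n_x), np.arange(n_y))
    points = np.zeros((n_x * n_y, 3), dtype=np.float32)
    points[:, 0] = grid_x.ravel()
    points[:, 1] = grid_y.ravel()
    # Scale the unit grid by the square size; the third column stays at 0.
    return points * square_size


object_points = make_object_points((3, 2), 25.0)
# Rows, in order: (0,0,0), (25,0,0), (50,0,0), (0,25,0), (25,25,0), (50,25,0)
```

Since the square size only scales the grid, passing it in millimetres yields object points, and later camera translations, in millimetres.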

- bob.ip.stereo.calibration.get_valid_frames_for_intrinsic_calibration(detection_df, camera, pattern_type)

Filters the detection df to keep only the frames that allow to estimate the intrinsic parameters of camera.

A frame in the calibration file has images for multiple streams. However, it is possible that the pattern was not detected for a stream in a frame. This function filters out the frames for which the pattern was not detected for the camera stream, returning only valid frames for the intrinsic parameter estimation of camera.

- Parameters

detection_df (pandas.DataFrame) – DataFrame to filter, containing the detected points coordinates (and ID if charuco pattern) for all frames in all calibration files.

camera (str) – Camera whose intrinsic parameters will be estimated.

pattern_type (str) – “chessboard” or “charuco”: type of pattern.

- Returns

detection_df – Filtered dataframe with only valid frames for intrinsic parameter estimation of camera.

- Return type

pandas.DataFrame

- bob.ip.stereo.calibration.format_calibration_points_from_df(df, object_points, pattern_type)

Format the detected pattern points in df and objects points in appropriate format for parameter estimation.

This is a utility function to take the image points in df, the corresponding 3D points in object_points and output them in the format required by opencv’s camera parameters estimation functions. df contains the image points (calibration points in the coordinate system of the image pixels), and object_points the pattern points in the coordinate system of the pattern. If a chessboard pattern is used, this function simply return the points as separate arrays. If a charuco board is used, the function also selects the object points corresponding to the detected image points.

- Parameters

df (pandas.DataFrame) – Dataframe containing the detected image points to use for the calibration of a camera. The index is the capture filename and frame, the values are the image point coordinates. If a charuco pattern is used, the values are a tuple of the image point ids and coordinates.

object_points (numpy.ndarray) – 3D coordinates of the pattern points, in the coordinate system of the pattern. shape: (#points, 3)

pattern_type (str) – “chessboard” or “charuco”. Type of the pattern used for calibration.

- Returns

im_pts (list) – Image points list. The length of the list is the number of capture files. Each element in the list is an array of the detected image points for this file. shape: (#points, 1, 2)

obj_pts (list) – Object points list. The object points are the coordinates, in the coordinate system of the pattern, of the detected image points.

ids (list) – list of the points ids. If chessboard, the list is empty (the points don’t have an id).

- bob.ip.stereo.calibration.compute_intrinsics(im_pts, image_size, params, obj_pts=None, ids=None, charuco_board=None)

Estimates the intrinsic parameters of a camera.

This function is basically a wrapper around opencv’s calibration functions calibrateCameraCharuco or calibrateCamera. For more details on the format or meaning of the return values, please consult opencv’s documentation, eg:

https://docs.opencv.org/4.5.0/d9/d0c/group__calib3d.html#ga3207604e4b1a1758aa66acb6ed5aa65d

https://docs.opencv.org/4.5.0/d9/d6a/group__aruco.html#ga54cf81c2e39119a84101258338aa7383

- Parameters

im_pts (list) – Coordinates of the detected pattern corners, in the image pixels coordinate system. Length of the list is the number of calibration capture files. Each element is an array of image points coordinates (shape: (#points, 1, 2))

image_size (dict) – Shape of the images of the camera which parameters are computed.

params (dict) – Parameters for the intrinsic parameter estimation algorithms. These are the parameters in the “intrinsics” field of the config passed to this script.

obj_pts (list) – List of detected pattern points, in the coordinate system of the pattern, by default None. This is required if the pattern used was a chessboard pattern, but is not necessary for charuco patterns: the charuco calibration function builds the object points coordinates using the detected points ids (For a given size, a pattern always has the same points in the same place).

ids (list) – Ids of the image points, by default None. This is only required with a charuco pattern.

charuco_board (cv2.aruco_CharucoBoard) – Dictionary-like structure defining the charuco pattern, by default None. Required if pattern_type is “charuco”.

- Returns

reprojection_error (float) – Reprojection error

cam_mat (numpy.ndarray) – opencv 3x3 camera matrix: [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]

dist_coefs (numpy.ndarray) – Opencv’s distortion coefficients (length depends on the pattern type and estimation algorithm parameters)

- bob.ip.stereo.calibration.get_valid_frames_for_extrinsic_calibration(detection_df, camera_1, camera_2, pattern_type)

Select the frames in which enough pattern points were detected to estimate the extrinsic parameters between 2 cameras.

If a chessboard pattern is used, the detection either finds all points or none, so this function just selects the frames where the detection was successful for both cameras. For a charuco pattern, the detection can return a subset of the pattern points. In this case, the function selects the frames with enough matching points (i.e. with the same id) detected in both cameras.

- Parameters

detection_df (pandas.DataFrame) – DataFrame to filter, containing the detected points coordinates (and ID if charuco pattern) for all frames in all calibration files.

camera_1 (str) – First camera whose extrinsic parameters will be estimated.

camera_2 (str) – Second camera whose extrinsic parameters will be estimated.

pattern_type (str) – “chessboard” or “charuco”: type of pattern.

- Returns

detection_df – Filtered dataframe with only valid frames for extrinsic parameter estimation between camera_1 and camera_2.

- Return type

pandas.DataFrame
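For a charuco pattern, matching the points seen by two cameras amounts to intersecting the detected ids. The sketch below illustrates the idea with numpy's `intersect1d`. It is not the library's implementation: the helper name `match_charuco_points` and the default threshold of 4 points are assumptions, and the ids are assumed to be unique within a frame.

```python
import numpy as np


def match_charuco_points(ids_1, pts_1, ids_2, pts_2, min_points=4):
    """Hypothetical helper: keep the points whose ids were detected in both cameras.

    ids_* have shape (#detected, 1) and pts_* shape (#detected, 1, 2).
    Returns None when fewer than min_points ids are shared.
    """
    common, idx_1, idx_2 = np.intersect1d(
        ids_1.ravel(), ids_2.ravel(), return_indices=True
    )
    if common.size < min_points:
        return None
    # Index both point arrays so that row i of each refers to the same corner id.
    return common, pts_1[idx_1], pts_2[idx_2]


ids_1 = np.array([[0], [1], [2], [5], [7]])
ids_2 = np.array([[7], [2], [3], [5], [0]])
pts_1 = np.arange(10, dtype=np.float32).reshape(5, 1, 2)
pts_2 = np.arange(10, 20, dtype=np.float32).reshape(5, 1, 2)

matched = match_charuco_points(ids_1, pts_1, ids_2, pts_2)
```

The shared ids can also be used to index the board's object points, which gives the three aligned lists (object points, image points of camera 1, image points of camera 2) expected by a stereo calibration.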

- bob.ip.stereo.calibration.compute_relative_extrinsics(obj_pts, im_pts1, cam_m1, dist_1, im_pts2, cam_m2, dist_2, image_size=None)

Compute extrinsic parameters of camera 1 with respect to camera 2.

This function is basically a wrapper around opencv’s stereoCalibrate function. For more information on the output values and signification, please refer to opencv’s documentation, eg: https://docs.opencv.org/4.5.0/d9/d0c/group__calib3d.html#ga91018d80e2a93ade37539f01e6f07de5

- Parameters

obj_pts (list) – Object points list: Coordinates of the detected pattern points in the coordinate system of the pattern

im_pts1 (list) – Image points of camera 1: the coordinates of the pattern points in the coordinate system of the camera 1 pixels. Each element of the list is a numpy array with shape (#points, 1, 2)

cam_m1 (numpy.ndarray) – Camera matrix of camera 1.

dist_1 (numpy.ndarray) – Distortion coefficients of camera 1.

im_pts2 (numpy.ndarray) – Image points of camera 2: the coordinates of the pattern points in the coordinate system of the camera 2 pixels. Each element of the list is a numpy array with shape (#points, 1, 2)

cam_m2 (numpy.ndarray) – Camera matrix of camera 2.

dist_2 (numpy.ndarray) – Distortion coefficients of camera 2.

image_size (tuple) – Shape of the image, used only to initialize the camera intrinsic matrices, by default None.

- Returns

reprojection_error (float) – Reprojection error

R (numpy.ndarray) – Relative rotation matrix of camera 1 with respect to camera 2. shape: (3, 3)

T (numpy.ndarray) – Relative translation of camera 1 with respect to camera 2. shape: (3, 1)