Algorithms

Algorithms are user-defined pieces of software that run within the blocks of a toolchain. An algorithm can read data on the input(s) of the block and write processed data on its output(s) (we refer to the inputs and outputs collectively as endpoints). They are, hence, key components for scientific experiments, since they formally describe how to transform raw data into higher-level concepts such as classes.

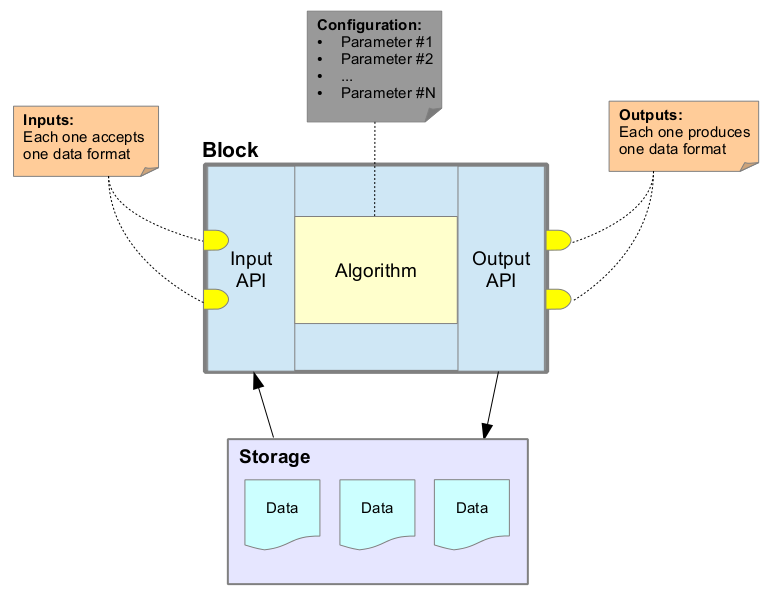

An algorithm lies at the core of each processing block and may be subject to parametrization. Inputs and outputs of an algorithm have well-defined data formats. The format of the data on each input and output of the block is defined at a higher level in the BEAT framework, and the implementation of the algorithm is expected to respect the format declared for each endpoint.

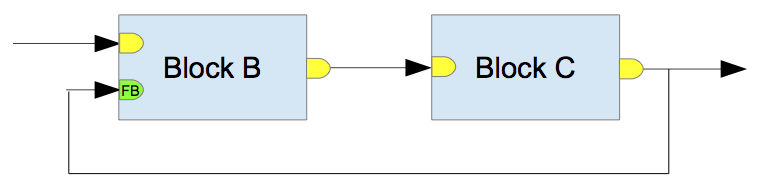

Fig. 1 displays the relationship between a processing block and its algorithm.

Fig. 1 Relationship between a processing block and its algorithm

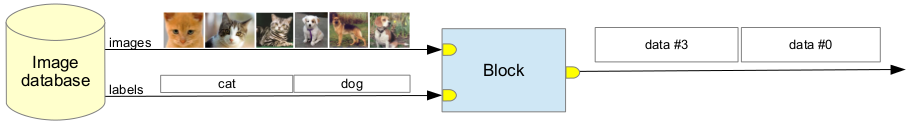

Typically, an algorithm will process data units received at the input endpoints, and push the relevant results to the output endpoints. Each algorithm must have at least one input and, except for analyzers (see below), at least one output. The links in a toolchain connect the output of one block to the input of another, effectively connecting algorithms together and thus determining the information-flow through the toolchain.

Blocks at the beginning of the toolchain are typically connected to datasets, and blocks at the end of a toolchain are connected to analyzers (special algorithms with no output). BEAT is responsible for delivering inputs from the desired datasets into the toolchain and through your algorithms. This drives the synchronization of information-flow through the toolchain. Flow synchronization is determined by data units produced from a dataset and injected into the toolchain.

Note

Naming Convention

Algorithms are named using three values separated by a / (slash):

username: indicates the author of the algorithm

name: indicates the name of the algorithm

version: indicates the version (integer starting from 1) of the algorithm

Each tuple of these three components defines a unique algorithm name inside the BEAT ecosystem.
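As an illustration of this naming rule, the sketch below splits a full algorithm name into its three components and checks the version number. The helper `parse_algorithm_name` and the example name `jdoe/pca/1` are hypothetical; they are not part of the BEAT API.

```python
from typing import Tuple


def parse_algorithm_name(full_name: str) -> Tuple[str, str, int]:
    """Split a 'username/name/version' string into its three components.

    Hypothetical helper, written only to illustrate the naming convention.
    """
    parts = full_name.split("/")
    if len(parts) != 3:
        raise ValueError(f"expected 'username/name/version', got {full_name!r}")
    username, name, version_text = parts
    version = int(version_text)  # raises ValueError if not an integer
    if version < 1:
        raise ValueError("versions are integers starting from 1")
    return username, name, version


print(parse_algorithm_name("jdoe/pca/1"))  # ('jdoe', 'pca', 1)
```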

Algorithm types

The current version of the BEAT framework has two algorithm types, which differ in the way they handle data samples:

Sequential

Autonomous

In the previous versions of BEAT only one type of algorithm (referred to as v1 algorithm) was implemented. The sequential algorithm type is the direct successor of the v1 algorithm. For migration information, see Migrating from API v1 to API v2.

The platform now also provides the concept of a soft loop, which allows supervised processing to be implemented within a macro block.

Sequential

The sequential algorithm is data-driven: it is typically given one data sample at a time and must immediately produce some output data.

Autonomous

As its name suggests, the autonomous algorithm is responsible for loading the data samples it needs in order to do its work. It is also responsible for writing the appropriate amount of data on its outputs.

Furthermore, the way the algorithm handles the data is highly configurable and covers a wide range of possible scenarios.

Loop

A loop is composed of three elements:

A processor algorithm

An evaluator algorithm

A LoopChannel

The two algorithms work as a pair, using the LoopChannel to communicate. The processor algorithm is responsible for applying some transformation or analysis to a set of data and then sending the result to the evaluator for validation. The role of the evaluator is to provide feedback that tells the processor whether to continue processing the same block of data or to move on to the next one, until all data is exhausted. The output writing of the evaluator is synchronized with the output writing of the processor.

In the sequential versions, reading is also synchronized, so that the evaluator reads data at the same pace as the processor.

Both algorithms are available in sequential and autonomous form. However, only three combinations are valid:

| Processor | Evaluator |
|---|---|
| Autonomous | Autonomous |
| Sequential | Sequential |
| Sequential | Autonomous |

Definition of an Algorithm

An algorithm is defined by two distinct components:

a JSON object with several fields, specifying the inputs, the outputs, the parameters and additional information such as the language in which it is implemented.

source code (and/or [later] binary code) describing how to transform the input data.

JSON Declaration

A JSON declaration of an algorithm consists of several fields. For example, the following declaration is that of an algorithm implementing principal component analysis (PCA):

```json
{
    "schema_version": 2,
    "language": "python",
    "api_version": 2,
    "type": "sequential",
    "splittable": false,
    "groups": [
        {
            "inputs": {
                "image": {
                    "type": "system/array_2d_uint8/1"
                }
            },
            "outputs": {
                "subspace": {
                    "type": "tutorial/linear_machine/1"
                }
            }
        }
    ],
    "parameters": {
        "number-of-components": {
            "default": 5,
            "type": "uint32"
        }
    },
    "description": "Principal Component Analysis (PCA)"
}
```

Here is a description of each field in the example above:

schema_version: specifies which schema version must be used to validate the file content.

api_version: specifies the version of the API implemented by the algorithm.

type: specifies the type of the algorithm (for example, sequential or autonomous). The execution model depends on it.

language: specifies the language in which the algorithm is implemented.

splittable: indicates whether the algorithm can be parallelized into chunks.

parameters: lists the parameters of the algorithm, describing both their default values and their types.

groups: gives information about the inputs and outputs of the algorithm. They are provided as a list of dictionaries, each element of which is associated with a database channel. The group that contains the outputs is the synchronization channel. By default, the BEAT framework automatically loops over the synchronization channel, so user code must not loop on this group. In contrast, it is the responsibility of the user to load data from the other groups. This is described in more detail in the following subsections.

description: is optional and gives a short description of the algorithm.
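To make the role of the groups field concrete, the sketch below parses the PCA declaration with Python's standard json module, picks out the synchronization group (the one declaring outputs) and collects the parameter defaults. The helpers `synchronized_group` and `default_parameters` are hypothetical, not BEAT functions, and the sketch assumes, as the description above implies, that exactly one group declares outputs (analyzers, which have no outputs, are not handled).

```python
import json

# The PCA declaration shown above
DECLARATION = """
{
    "schema_version": 2,
    "language": "python",
    "api_version": 2,
    "type": "sequential",
    "splittable": false,
    "groups": [
        {
            "inputs": {"image": {"type": "system/array_2d_uint8/1"}},
            "outputs": {"subspace": {"type": "tutorial/linear_machine/1"}}
        }
    ],
    "parameters": {
        "number-of-components": {"default": 5, "type": "uint32"}
    },
    "description": "Principal Component Analysis (PCA)"
}
"""


def synchronized_group(declaration):
    """Return the group that declares outputs (the synchronization channel)."""
    groups = [group for group in declaration["groups"] if "outputs" in group]
    if len(groups) != 1:
        raise ValueError("expected exactly one group declaring outputs")
    return groups[0]


def default_parameters(declaration):
    """Map each declared parameter name to its default value."""
    return {name: spec.get("default")
            for name, spec in declaration.get("parameters", {}).items()}


decl = json.loads(DECLARATION)
sync = synchronized_group(decl)
print(list(sync["inputs"]))      # ['image']
print(default_parameters(decl))  # {'number-of-components': 5}
```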

Note

The graphical interface of BEAT provides user-friendly editors to configure the main components of the system (for example: algorithms, data formats, etc.), which simplifies writing their JSON declarations. Writing the JSON declaration by hand, following the specifications described here, is only necessary when not using this graphical interface.

Analyzer

At the end of the processing workflow of an experiment, there is a special kind of algorithm, which does not yield any output but instead produces results. These algorithms are called analyzers.

Results of an experiment are reported back to the user. Data privacy is very important in the BEAT framework, and therefore only a limited number of data formats can be employed as results in an analyzer, such as booleans, integers, floating-point values, strings (of limited size), as well as plots (such as scatter or bar plots).

For example, the following declaration is that of a simple analyzer, which generates an ROC curve as well as a few other metrics.

```json
{
    "language": "python",
    "groups": [
        {
            "inputs": {
                "scores": {
                    "type": "tutorial/probe_scores/1"
                }
            }
        }
    ],
    "results": {
        "far": {
            "type": "float32",
            "display": true
        },
        "roc": {
            "type": "plot/scatter/1",
            "display": false
        },
        "number_of_positives": {
            "type": "int32",
            "display": false
        },
        "frr": {
            "type": "float32",
            "display": true
        },
        "eer": {
            "type": "float32",
            "display": true
        },
        "threshold": {
            "type": "float32",
            "display": false
        },
        "number_of_negatives": {
            "type": "int32",
            "display": false
        }
    }
}
```

Source code

The BEAT framework has been designed to support algorithms written in different programming languages. However, for each language, a corresponding back-end needs to be implemented, which is in charge of connecting the inputs and outputs to the algorithm and running its code as expected. In this section, we describe the implementation of algorithms in the Python and C++ programming languages.

BEAT treats algorithms as objects. In Python, an algorithm is a class named Algorithm; in C++, it must derive from IAlgorithmLegacy, IAlgorithmSequential, or IAlgorithmAutonomous, depending on the algorithm type. To define a new algorithm, at least one method must be implemented:

process(): the method that actually processes inputs and produces outputs.

The code example below illustrates the implementation of an algorithm (in Python):

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        # here, you read inputs, process and write results to outputs
        return True
```

Here is the equivalent example for a sequential algorithm in C++:

```cpp
class Algorithm: public IAlgorithmSequential
{
public:
    bool process(const InputList& inputs, const DataloaderList& data_load_list, const OutputList& outputs) override
    {
        // here, you read inputs, process and write results to outputs
        return true;
    }
};
```

Examples

To implement a new algorithm, one must write a class following a few conventions. The following sections provide examples of such classes.

Simple sequential algorithm (no parametrization)

At the very minimum, an algorithm class must look like this:

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        # Read data from inputs, compute something, and write the result
        # of the computation on outputs
        ...
        return True
```

The class must be called Algorithm and must have a method called process() that takes as parameters a list of inputs (see section Input list), a list of data loaders (see section DataLoader list) and a list of outputs (see section Output list). This method must return True if everything went correctly, and False if an error occurred.

The platform will call this method once per block of data available on the synchronized inputs of the block.

Simple autonomous algorithm (no parametrization)

At the very minimum, an algorithm class must look like this:

```python
class Algorithm:

    def process(self, data_loaders, outputs):
        # Read data from data_loaders, compute something, and write the
        # result of the computation on outputs
        ...
        return True
```

The class must be called Algorithm and must have a method called process() that takes as parameters a list of data loaders (see section Data loaders) and a list of outputs (see section Output list). This method must return True if everything went correctly, and False if an error occurred.

The platform calls this method only once, since the algorithm itself is responsible for loading the appropriate amount of data and processing it.
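A minimal sketch of an autonomous algorithm that walks through a whole data loader by itself, using only calls that appear elsewhere in this document: `loaderOf()`, `count()`, indexing a loader to get `(data, start, end)`, and `write(data, end_data_index)`. The `Fake*` and `_Value` classes are hypothetical stand-ins so the sketch runs without the platform; the input and output names `in`/`out` and the doubling computation are placeholders, and writing one output per unit with its end index is an assumption about what "the appropriate amount of data" means for this toy case.

```python
class _Value:
    """Hypothetical stand-in for a data object with a 'value' field."""
    def __init__(self, value):
        self.value = value


class FakeDataLoader:
    """Mimics loader.count() and loader[i] -> (data, start, end)."""
    def __init__(self, name, values):
        self.name = name
        self.values = values

    def count(self):
        return len(self.values)

    def __getitem__(self, index):
        return {self.name: _Value(self.values[index])}, index, index


class FakeDataLoaderList:
    def __init__(self, loaders):
        self.loaders = loaders

    def loaderOf(self, input_name):
        return self.loaders[input_name]


class FakeOutput:
    def __init__(self):
        self.written = []

    def write(self, data, end_data_index=None):
        self.written.append((data, end_data_index))


class Algorithm:
    """Autonomous algorithm: loads every unit itself, writes one output per unit."""

    def process(self, data_loaders, outputs):
        loader = data_loaders.loaderOf('in')
        for i in range(loader.count()):
            data, _, end = loader[i]
            doubled = 2 * data['in'].value  # placeholder computation
            outputs['out'].write({'value': doubled}, end)
        return True


out = FakeOutput()
loaders = FakeDataLoaderList({'in': FakeDataLoader('in', [1, 2, 3])})
Algorithm().process(loaders, {'out': out})
print(out.written)  # [({'value': 2}, 0), ({'value': 4}, 1), ({'value': 6}, 2)]
```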

Simple autonomous processor algorithm (no parametrization)

At the very minimum, a processor algorithm class must look like this:

```python
import numpy as np


class Algorithm:

    def process(self, data_loaders, outputs, loop_channel):
        # Read data from data_loaders, compute something, and validate the
        # hypothesis with the evaluator through the loop channel
        ...
        is_valid, feedback = loop_channel.validate({"value": np.float64(some_value)})
        # check is_valid and continue appropriately, then write the result
        # of the computation on outputs
        ...
        return True
```

The class must be called Algorithm and must have a method called process() that takes as parameters a list of data loaders (see section DataLoader list), a list of outputs (see section Output list) and a loop channel (see section Soft loop communication). This method must return True if everything went correctly, and False if an error occurred.

The platform will call this method once per block of data available on the synchronized inputs of the block.

Simple autonomous evaluator algorithm (no parametrization)

At the very minimum, an evaluator algorithm class must look like this:

```python
import numpy as np


class Algorithm:

    def validate(self, hypothesis):
        # compute whether the hypothesis makes sense and return a tuple with
        # a boolean value and some feedback
        ...
        return (result, {"value": np.float32(delta)})

    def write(self, outputs, processor_output_name, end_data_index):
        # write something on its output; this is called in sync with the
        # processor algorithm's writes
        outputs["out"].write({"value": np.int32(self.output)}, end_data_index)
```

The class must be called Algorithm and must have a method called validate() that takes as parameter a data format instance containing the hypothesis that needs validation. The method must return a tuple made of a boolean value and a feedback value, which the processor uses to determine whether it should continue processing the current data or move on. The class must also have a write() method, which is called in sync with the output writing of the processor.
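To illustrate the validate()/feedback exchange, here is a toy sketch in plain Python. The evaluator accepts a hypothesis once it is within a tolerance of a target and returns the signed distance as feedback; the processor side moves its hypothesis halfway towards the target until it is accepted. All names here (`Evaluator`, `FakeLoopChannel`, `refine`, the target and tolerance) are hypothetical: in BEAT the loop channel is provided by the platform, the two algorithms run in separate blocks, and both classes must be called Algorithm.

```python
class Evaluator:
    """Toy evaluator: accepts a hypothesis within 'tolerance' of 'target'."""

    def __init__(self, target, tolerance):
        self.target = target
        self.tolerance = tolerance

    def validate(self, hypothesis):
        # Return (is_valid, feedback); the feedback is the signed distance
        # between the target and the hypothesis
        delta = self.target - hypothesis["value"]
        return (abs(delta) <= self.tolerance, {"value": delta})


class FakeLoopChannel:
    """Hypothetical stand-in that forwards validate() calls to the evaluator."""

    def __init__(self, evaluator):
        self.evaluator = evaluator

    def validate(self, hypothesis):
        return self.evaluator.validate(hypothesis)


def refine(loop_channel, start, max_iterations=50):
    """Processor side: update the hypothesis until the evaluator accepts it."""
    value = start
    for iteration in range(max_iterations):
        is_valid, feedback = loop_channel.validate({"value": value})
        if is_valid:
            return value, iteration
        value += feedback["value"] / 2  # move halfway towards the target
    raise RuntimeError("no valid hypothesis found")


channel = FakeLoopChannel(Evaluator(target=10.0, tolerance=0.5))
print(refine(channel, start=0.0))  # (9.6875, 5)
```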

Parameterizable algorithm

The following is valid for all types of algorithms.

To implement a parameterizable algorithm, two things must be added: (1) a field in the JSON declaration of the algorithm containing the default values as well as the types of the parameters, and (2) a method called setup() in the class, which takes one argument, a map containing the parameters of the algorithm.

```json
{
    ...
    "parameters": {
        "threshold": {
            "default": 0.5,
            "type": "float32"
        }
    },
    ...
}
```

```python
class Algorithm:

    def setup(self, parameters):
        # Retrieve the value of the parameters
        self.threshold = parameters['threshold']
        return True

    def process(self, inputs, data_loaders, outputs):
        # Read data from inputs, compute something, and write the result
        # of the computation on outputs
        ...
        return True
```

When retrieving the value of the parameters, one must not assume that a value was provided for each parameter. This is why a try: … except: … construct may be needed in the setup() method.
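A minimal sketch of that try: … except: … pattern, assuming that the parameters map behaves like a Python dict (so a missing entry raises KeyError) and hard-coding the default 0.5 from the JSON fragment above. The constant name `DEFAULT_THRESHOLD` is hypothetical.

```python
# Assumed to mirror the "default" value of the JSON declaration above
DEFAULT_THRESHOLD = 0.5


class Algorithm:

    def setup(self, parameters):
        # 'parameters' may not contain every declared parameter, so fall back
        # to the default value when 'threshold' is missing
        try:
            self.threshold = float(parameters['threshold'])
        except KeyError:
            self.threshold = DEFAULT_THRESHOLD
        return True

    def process(self, inputs, data_loaders, outputs):
        # Placeholder: real processing would use self.threshold here
        ...
        return True


with_value = Algorithm()
with_value.setup({'threshold': 0.8})
print(with_value.threshold)  # 0.8

without_value = Algorithm()
without_value.setup({})
print(without_value.threshold)  # 0.5
```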

Preparation of an algorithm

The following is valid for all types of algorithms.

Often, algorithms need to compute some values or retrieve some data before applying their mathematical logic.

This is possible using the prepare() method, which receives the list of data loaders:

```python
class Algorithm:

    def prepare(self, data_loaders):
        # Retrieve and prepare some data.
        data_loader = data_loaders.loaderOf('in2')
        (data, _, _) = data_loader[0]
        self.offset = data['in2'].value
        return True

    def process(self, inputs, data_loaders, outputs):
        # Read data from inputs, compute something, and write the result
        # of the computation on outputs
        ...
        return True
```

Data Synchronization in Sequential Algorithms

One particularity of the BEAT framework is how the data-flow through a given toolchain is synchronized. The framework is responsible for extracting data units (images, speech-segments, videos, etc.) from the database and presenting them to the input endpoints of certain blocks, as specified in the toolchain. Each presentation of a new data unit to the input of a block can be thought of as an individual time unit. The algorithm implemented in a block is responsible for the synchronization between its inputs and its output. In other words, every time a data unit is produced by a dataset on an experiment, the process() method of your algorithm is called to act upon it.

An algorithm may have one of two kinds of synchronization: one-to-one and many-to-one. These are discussed in detail in separate sections below.

One-to-one synchronization

Here, the algorithm generates one output for every input entity (e.g., image, video, speech-file). For example, an image-based feature-extraction algorithm would typically output one set of features every time it is called with a new input image. A schematic diagram of one-to-one synchronization for an algorithm is shown in the figure below:

In the configuration shown in this figure, the algorithm-block has two endpoints: one input and one output. The inputs, the outputs and the block are synchronized together (notice the color information). Each red box represents one input unit (e.g., an image, or a video) that is fed to the input interface of the block. For each input received, the block produces one output unit, shown as a blue box in the figure.

The example code below shows how to implement an algorithm in this configuration:

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):

        # to read the field "value" on the "in" input, use "data"
        # a possible declaration of "user/format/1" would be:
        # {
        #   "value": ...
        # }
        value = inputs['in'].data.value

        # do your processing and create the "output" value
        output = magical_processing(value)

        # to write "output" into the relevant endpoint use "write"
        # a possible declaration of "user/other/1" would be:
        # {
        #   "value": ...
        # }
        outputs['out'].write({'value': output})

        # No error occurred, so return True
        return True
```

```cpp
class Algorithm: public IAlgorithmSequential
{
public:
    bool process(const InputList& inputs, const DataloaderList& data_load_list, const OutputList& outputs) override
    {
        // to read the field "value" on the "in" input, use "data"
        // a possible declaration of "user/format/1" would be:
        // {
        //   "value": ...
        // }
        auto value = inputs["in"]->data<user::format_1>()->value;

        // do your processing and create the "output" value
        auto output = magical_processing(value);

        // to write "output" into the relevant endpoint use "write"
        // a possible declaration of "user/other/1" would be:
        // {
        //   "value": ...
        // }
        user::other_1 result;
        result.value = output;

        outputs["out"]->write(&result);

        // No error occurred, so return true
        return true;
    }
};
```

In this example, the platform will call the user algorithm every time a new input block with the format user/format/1 is available at the input. Notice that no for loops are necessary in the user code: the platform controls the looping for you.
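The looping the platform performs can be mimicked locally to see the one-to-one behaviour: one process() call per data unit, one output per call. In the sketch below, `run`, `FakeInput`, `FakeOutput` and `_Data` are hypothetical stand-ins for the platform, and `magical_processing` is given a placeholder body; the Algorithm class has the same logic as the Python example above.

```python
class _Data:
    """Hypothetical stand-in for a data object exposing a 'value' field."""
    def __init__(self, value):
        self.value = value


class FakeInput:
    def __init__(self):
        self.data = None


class FakeOutput:
    def __init__(self):
        self.written = []

    def write(self, data):
        self.written.append(data)


def magical_processing(value):
    return value * 10  # placeholder computation


class Algorithm:
    # Same logic as the Python example above
    def process(self, inputs, data_loaders, outputs):
        value = inputs['in'].data.value
        outputs['out'].write({'value': magical_processing(value)})
        return True


def run(algorithm, values):
    """Hypothetical driver mimicking the platform: one process() call per unit."""
    inputs = {'in': FakeInput()}
    outputs = {'out': FakeOutput()}
    for value in values:
        inputs['in'].data = _Data(value)  # present the next data unit
        if not algorithm.process(inputs, None, outputs):
            raise RuntimeError("process() reported an error")
    return outputs['out'].written


print(run(Algorithm(), [1, 2, 3]))  # [{'value': 10}, {'value': 20}, {'value': 30}]
```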

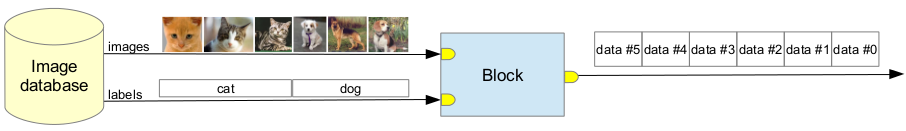

A more complex case of one-to-one synchronization is shown in the following figure:

In such a configuration, the platform will ensure that each input unit at the input-endpoint in is associated with the correct input unit at the input-endpoint in2. For example, referring to the figure above, the items at the input in could be images and the items at the input in2 could be labels; the configuration depicted then indicates that the first two input images have the same label, say, l1, whereas the next two input images have the same label, say, l2. The algorithm produces one output item at the endpoint out for each input object presented at endpoint in.

Example code implementing an algorithm processing data in this scenario is shown below:

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):

        i1 = inputs['in'].data.value
        i2 = inputs['in2'].data.value

        out = magical_processing(i1, i2)

        outputs['out'].write({'value': out})

        return True
```

```cpp
class Algorithm: public IAlgorithmSequential
{
public:
    bool process(const InputList& inputs, const DataloaderList& data_load_list, const OutputList& outputs) override
    {
        auto i1 = inputs["in"]->data<user::format_1>()->value;
        auto i2 = inputs["in2"]->data<user::format_1>()->value;

        auto out = magical_processing(i1, i2);

        user::other_1 result;
        result.value = out;

        outputs["out"]->write(&result);

        return true;
    }
};
```

Notice that we still don’t require any sort of for loop! BEAT synchronizes the inputs in and in2 so that they are available to your program as defined by the dataset implementor.

Many-to-one synchronization

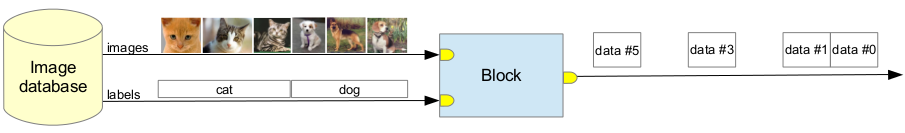

Here, the algorithm produces a single output after processing a batch of inputs. For example, the algorithm may produce a model for a dog after processing all input images for the dog class. A block diagram illustrating many-to-one synchronization is shown below:

Here the synchronization is driven by the endpoint in2. For each data unit received at the input in2, the algorithm generates one output unit. Note that, here, multiple units received at the input in are accumulated and associated with a single unit received at in2. The user does not have to handle the internal indexing. Producing output data at the right moment is enough for BEAT to understand that the output is synchronized with in2.

The example below illustrates how such an algorithm could be implemented:

```python
class Algorithm:

    def __init__(self):
        self.objs = []

    def process(self, inputs, data_loaders, outputs):
        self.objs.append(inputs['in'].data.value)  # accumulates

        if not (inputs['in2'].hasMoreData()):
            out = magical_processing(self.objs)
            outputs['out'].write({'value': out})
            self.objs = []  # reset accumulator for next label

        return True
```

```cpp
class Algorithm: public IAlgorithmSequential
{
public:
    bool process(const InputList& inputs, const DataloaderList& data_load_list, const OutputList& outputs) override
    {
        objs.push_back(inputs["in"]->data<user::format_1>()->value); // accumulates

        if (!inputs["in2"]->hasMoreData())
        {
            auto out = magical_processing(objs);

            user::other_1 result;
            result.value = out;

            outputs["out"]->write(&result);

            objs.clear(); // reset accumulator for next label
        }

        return true;
    }

public:
    std::vector<float> objs;
};
```

Here, the units received at the endpoint in are accumulated as long as the hasMoreData() method attached to the input in2 returns True. When hasMoreData() returns False, the corresponding label is read from in2, and a result is produced at the endpoint out. After an output unit has been produced, the internal accumulator for in is cleared, and the algorithm starts accumulating a new set of objects for the next label.
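The accumulate-then-flush behaviour can be simulated with hypothetical stand-ins for the platform. The driver below uses a simplified model of the behaviour described above: `in2` reports more data while the next sample still carries the same label, and no more data at the last sample of each label. This is an illustration, not the platform's actual implementation; `magical_processing` is given a placeholder body (the mean of the accumulated values).

```python
class _Data:
    """Hypothetical stand-in for a data object exposing a 'value' field."""
    def __init__(self, value):
        self.value = value


class FakeInput:
    def __init__(self):
        self.data = None
        self.more_data = False

    def hasMoreData(self):
        return self.more_data


class FakeOutput:
    def __init__(self):
        self.written = []

    def write(self, data):
        self.written.append(data)


def magical_processing(objs):
    return sum(objs) / len(objs)  # placeholder: mean of the accumulated values


class Algorithm:
    # Same logic as the Python example above
    def __init__(self):
        self.objs = []

    def process(self, inputs, data_loaders, outputs):
        self.objs.append(inputs['in'].data.value)  # accumulates
        if not inputs['in2'].hasMoreData():
            outputs['out'].write({'value': magical_processing(self.objs)})
            self.objs = []  # reset accumulator for next label
        return True


def run(algorithm, samples):
    """Hypothetical driver; 'samples' is a list of (value, label) pairs grouped by label."""
    inputs = {'in': FakeInput(), 'in2': FakeInput()}
    outputs = {'out': FakeOutput()}
    for i, (value, label) in enumerate(samples):
        inputs['in'].data = _Data(value)
        inputs['in2'].data = _Data(label)
        # simplified model: 'in2' has more data while the next sample shares its label
        inputs['in2'].more_data = i + 1 < len(samples) and samples[i + 1][1] == label
        algorithm.process(inputs, None, outputs)
    return outputs['out'].written


result = run(Algorithm(), [(1.0, 'l1'), (3.0, 'l1'), (5.0, 'l2'), (7.0, 'l2')])
print(result)  # [{'value': 2.0}, {'value': 6.0}]
```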

Unsynchronized Operation

Not all inputs for a block need to be synchronized together. In the diagram shown below, the block is synchronized with the inputs in and in2 (as indicated by the green circle, which matches the colour of the input lines connecting in and in2). The output out is synchronized with the block (as one can notice by looking at the code below, an output is written after every in input). The input in3 is not synchronized with the endpoints in and in2, nor with the block. A processing block which receives a previously calculated model and must score test samples is a good example of this situation. In this case, the user is responsible for reading out the contents of in3 explicitly, so the algorithm includes an explicit loop to read the unsynchronized input (in3).

```python
class Algorithm:

    def __init__(self):
        self.models = []

    def prepare(self, data_loaders):

        # Loads the "model" data at the beginning
        loader = data_loaders.loaderOf('in3')
        for i in range(loader.count()):
            view = loader.view('in3', i)
            data, _, _ = view[0]
            self.models.append(data['in3'].value)
        return True

    def process(self, inputs, data_loaders, outputs):
        # N.B.: this will be called for every unit in 'in'

        # Processes the current input in 'in' and 'in2', apply the
        # model/models
        out = magical_processing(inputs['in'].data.value,
                                 inputs['in2'].data.value,
                                 self.models)

        # Writes the output
        outputs['out'].write({'value': out})

        return True
```

```cpp
class Algorithm: public IAlgorithmSequential
{
public:
    bool prepare(const beat::backend::cxx::DataLoaderList& data_load_list) override
    {
        // Loads the "model" data at the beginning
        auto loader = data_load_list["in3"];
        for (int i = 0 ; i < loader->count() ; ++i) {
            auto view = loader->view("in3", i);
            std::map<std::string, beat::backend::cxx::Data *> data;
            std::tie(data, std::ignore, std::ignore) = (*view)[0];
            auto model = static_cast<user::model_1*>(data["in3"]);
            models.push_back(*model);
        }

        return true;
    }

    bool process(const InputList& inputs, const DataloaderList& data_load_list, const OutputList& outputs) override
    {
        // N.B.: this will be called for every unit in 'in'

        // Processes the current input in 'in' and 'in2', apply the model/models
        auto out = magical_processing(inputs["in"]->data<user::format_1>()->value,
                                      inputs["in2"]->data<user::format_1>()->value,
                                      models);

        // Writes the output
        user::other_1 result;
        result.value = out;

        outputs["out"]->write(&result);

        return true;
    }

public:
    std::vector<user::model_1> models;
};
```

In the example above, a single input (in3) is unsynchronized with the block you are writing your algorithm for. You may also have several inputs that are synchronized with each other but not with the block, or even more unsynchronized inputs. In this case, declaring a separate group for each set of inputs makes the code easier to read, and it is safer to access the inputs through their group.
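The prepare()/process() split of the unsynchronized Python example earlier in this section can be exercised locally: the models from in3 are loaded once, then every unit of in is scored against all of them. The `Fake*` and `_Data` classes are hypothetical stand-ins mimicking `loaderOf()`, `count()` and `view(name, i)[0]` as used in that example, and `magical_processing` is given a placeholder body (distance to the closest model).

```python
class _Data:
    """Hypothetical stand-in for a data object exposing a 'value' field."""
    def __init__(self, value):
        self.value = value


class FakeLoader:
    """Mimics loader.count() and loader.view(name, i)[0] -> (data, start, end)."""
    def __init__(self, values):
        self.values = values

    def count(self):
        return len(self.values)

    def view(self, name, index):
        # a one-element list stands in for the view returned by the platform
        return [({name: _Data(self.values[index])}, index, index)]


class FakeLoaderList:
    def __init__(self, loaders):
        self.loaders = loaders

    def loaderOf(self, input_name):
        return self.loaders[input_name]


class FakeInput:
    def __init__(self, value):
        self.data = _Data(value)


class FakeOutput:
    def __init__(self):
        self.written = []

    def write(self, data):
        self.written.append(data)


def magical_processing(sample, label, models):
    # placeholder score: distance from the sample to the closest model
    return min(abs(sample - model) for model in models)


class Algorithm:
    def __init__(self):
        self.models = []

    def prepare(self, data_loaders):
        # Loads all "model" units from the unsynchronized input once
        loader = data_loaders.loaderOf('in3')
        for i in range(loader.count()):
            data, _, _ = loader.view('in3', i)[0]
            self.models.append(data['in3'].value)
        return True

    def process(self, inputs, data_loaders, outputs):
        out = magical_processing(inputs['in'].data.value,
                                 inputs['in2'].data.value,
                                 self.models)
        outputs['out'].write({'value': out})
        return True


loaders = FakeLoaderList({'in3': FakeLoader([0.0, 10.0])})
algorithm = Algorithm()
algorithm.prepare(loaders)  # runs once, before any process() call
output = FakeOutput()
for sample, label in [(1.0, 'a'), (8.0, 'b')]:
    inputs = {'in': FakeInput(sample), 'in2': FakeInput(label)}
    algorithm.process(inputs, loaders, {'out': output})

print(algorithm.models)  # [0.0, 10.0]
print(output.written)    # [{'value': 1.0}, {'value': 2.0}]
```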

Handling input data

Input list

An algorithm is given access to the list of the inputs of the processing block. This list can be used to access each input individually, either by their name (see section Input naming), their index or by iterating over the list:

```python
# 'inputs' is the list of inputs of the processing block
print(inputs['labels'].data_format)

for index in range(0, inputs.length):
    print(inputs[index].data_format)

for input in inputs:
    print(input.data_format)

for input in inputs[0:2]:
    print(input.data_format)
```

Additionally, the following method is usable on a list of inputs:

- InputList.hasMoreData()

Indicates if there is (at least) another block of data to process on some of the inputs

Input

Each input provides the following information:

- Input.name

(string) Name of the input

- Input.data_format

(string) Data format accepted by the input

- Input.data_index

(integer) Index of the last block of data received on the input (see section Inputs synchronization)

- Input.data

(object) The last block of data received on the input

The structure of the data object depends on the data format assigned to the input. Note that data can be None.

Input naming¶

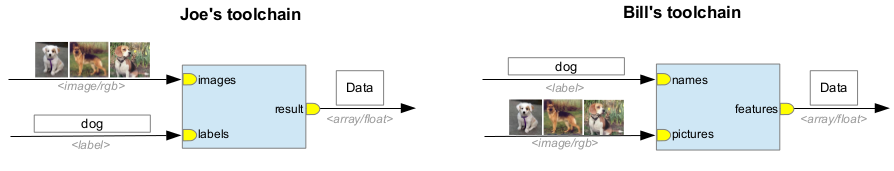

Each algorithm assign a name of its choice to each input (and output, see section Output naming). This mechanism ensures that algorithms are easily shareable between users.

For instance, in Fig. 2, two different users (Joe and Bill) are using two different toolchains. Both toolchains have one block with two entries and one output, with a similar set of data formats (image/rgb and label on the inputs, array/float on the output), although not in the same order. The two blocks use different algorithms, which both refers to their inputs and outputs using names of their choice

Nevertheless, Joe can choose to use Bill’s algorithm instead of his own one. When the algorithm to use is changed, BEAT will attempt to match each input with the names (and types) declared by the algorithm. In case of ambiguity, the user will be asked to manually resolve it.

In other words: the way the block is connected in the toolchain doesn’t force a naming scheme or a specific order of inputs to the algorithms used in that block. As long as the set of data types (on the inputs and outputs) is compatible for both the block and the algorithm, the algorithm can be used in the block.

Fig. 2 Different toolchains, but interchangeable algorithms¶

The name of the inputs are assigned in the JSON declaration of the algorithm, such as:

```json
{
    ...
    "groups": [
        {
            "inputs": {
                "name1": {
                    "type": "data_format_1"
                },
                "name2": {
                    "type": "data_format_2"
                }
            }
        }
    ],
    ...
}
```

Inputs synchronization¶

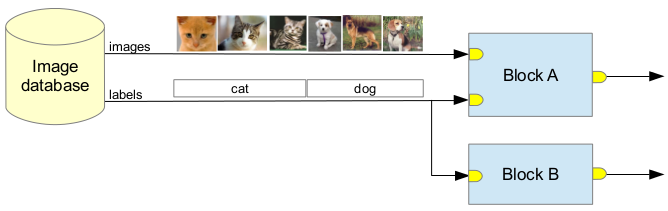

The data available on the different inputs from the synchronized channels are (of course) synchronized. Let’s consider the example toolchain on Fig. 3, where:

The image database provides two kind of data: some images and their associated labels

The block A receives both data via its inputs

The block B only receives the labels

Both algorithms are data-driven

The system will ask the block A to process 6 images, one by one. On the

second input, the algorithm will find the correct label for the current image.

The block B will only be asked to process 2 labels.

The algorithm can retrieve the index of the current block of data of each of

its input by looking at their data_index attribute. For simplicity, the

list of inputs has two attributes (current_data_index and

current_end_data_index) that indicates the data indexes currently used by

the synchronization mechanism of the platform.

Fig. 3 Synchronization example¶
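To make the index bookkeeping concrete, the sketch below finds, for each image index seen by block A, the label whose index range covers it, using Python's standard bisect module. It assumes, hypothetically, that the two labels of Fig. 3 cover images 0-2 and 3-5 with inclusive start and end indexes; `label_index_for` and `LABEL_RANGES` are illustrative names, not platform functions.

```python
from bisect import bisect_right

# Hypothetical (start, end) index ranges of the two labels in Fig. 3,
# with inclusive bounds: label 0 covers images 0-2, label 1 covers images 3-5
LABEL_RANGES = [(0, 2), (3, 5)]


def label_index_for(data_index, ranges):
    """Return the position of the (start, end) range that contains data_index."""
    starts = [start for start, _ in ranges]
    position = bisect_right(starts, data_index) - 1
    if position < 0 or data_index > ranges[position][1]:
        raise IndexError(f"no range covers index {data_index}")
    return position


print([label_index_for(i, LABEL_RANGES) for i in range(6)])  # [0, 0, 0, 1, 1, 1]
```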

Additional input methods for unsynchronized channels

Unsynchronized input channels of algorithms can be accessed at will, and algorithms can use them any way they want. To be able to perform their job, they have access to additional methods.

The following method is usable on a list of inputs:

- InputList.next()

Retrieve the next block of data on all the inputs in a synchronized manner

Let’s come back to the example toolchain in Fig. 3, and assume that block A uses an autonomous algorithm. To iterate over all the data on its inputs, the algorithm would do:

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        # Iterate over all the unsynchronized data
        while inputs.hasMoreData():
            inputs.next()
            # Do something with inputs['images'].data and inputs['labels'].data
            ...

        # At this point, there is no more data available on inputs['images'] and
        # inputs['labels']
        return True
```

The following methods are usable on an input, in cases where the algorithm doesn’t care about the synchronization of some of its inputs:

- Input.hasMoreData()

Indicates if there is (at least) another block of data available on the input

- Input.next()

Retrieve the next block of data

Warning

Once this method has been called by an algorithm, the input is no longer automatically synchronized with the other inputs of the block.

In the following example, the algorithm desynchronizes one of its inputs but keeps the others synchronized and iterates over all their data:

```json
{
    ...
    "groups": [
        {
            "inputs": {
                "images": {
                    "type": "image/rgb"
                },
                "labels": {
                    "type": "label"
                },
                "desynchronized": {
                    "type": "number"
                }
            }
        }
    ],
    ...
}
```

```python
class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        # Desynchronize the third input. From now on, inputs['desynchronized'].data
        # and inputs['desynchronized'].data_index won't change
        inputs['desynchronized'].next()

        # Iterate over all the data on the inputs still synchronized
        while inputs.hasMoreData():
            inputs.next()
            # Do something with inputs['images'].data and inputs['labels'].data
            ...

        # At this point, there is no more data available on inputs['images'] and
        # inputs['labels'], but there might be more on inputs['desynchronized']
        return True
```

Feedback inputs

Fig. 4 shows a toolchain containing a feedback loop. A special kind of input is needed in this scenario: a feedback input, which isn’t synchronized with the other inputs and can be freely used by the algorithm.

Those feedback inputs aren’t yet implemented in the prototype of the platform. This will be addressed in a later version.

Fig. 4 Feedback loop

Data loaders

DataLoader list

An algorithm is given access to the list of data loaders of the processing block. This list can be used to access each data loader individually, either by their channel name (see Input naming), their index or by iterating over the list:

```python
# 'data_loaders' is the list of data loaders of the processing block

# Retrieve a data loader by name
data_loader = data_loaders['labels']

# Retrieve a data loader by index
for index in range(0, len(data_loaders)):
    data_loader = data_loaders[index]

# Iteration over all data loaders
for data_loader in data_loaders:
    ...

# Retrieve the data loader an input belongs to, by input name
data_loader = data_loaders.loaderOf('label')
```

DataLoader

Provides access to data from a group of inputs synchronized together.

See DataLoader.

Handling output data¶

Output list¶

An algorithm is given access to the list of the outputs of the processing block. This list can be used to access each output individually, either by its name (see section Output naming), by its index, or by iterating over the list:

# 'outputs' is the list of outputs of the processing block

print(outputs['features'].data_format)

for index in range(0, len(outputs)):
    outputs[index].write(...)

for output in outputs:
    output.write(...)

for output in outputs[0:2]:
    output.write(...)

Output¶

Each output provides the following information:

- Output.name¶

(string) Name of the output

- Output.data_format¶

(string) Format of the data written on the output

And the following method:

- Output.write(data, end_data_index=None)¶

Write a block of data on the output
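The list and output behaviour described in the last two subsections can be mimicked with two small stand-in classes. This is a sketch under assumptions, not the platform's implementation. `ToyOutput` and `ToyOutputList` are hypothetical. `ToyOutput.write()` simply records each `(data, end_data_index)` pair it receives and does not interpret the index. The output names and the `number` format of the second output are invented for the illustration.

```python
class ToyOutput:
    """Hypothetical output: exposes name/data_format and records every write."""

    def __init__(self, name, data_format):
        self.name = name
        self.data_format = data_format
        self.written = []

    def write(self, data, end_data_index=None):
        # The real platform serializes 'data' according to data_format;
        # here we only keep it, together with the index we were given
        self.written.append((data, end_data_index))


class ToyOutputList:
    """Hypothetical list allowing access by name, index, slice and iteration."""

    def __init__(self, outputs):
        self._outputs = list(outputs)

    def __len__(self):
        return len(self._outputs)

    def __iter__(self):
        return iter(self._outputs)

    def __getitem__(self, key):
        if isinstance(key, str):
            for output in self._outputs:
                if output.name == key:
                    return output
            raise KeyError(key)
        return self._outputs[key]  # an int or a slice


outputs = ToyOutputList([
    ToyOutput("features", "array/float"),
    ToyOutput("scores", "number"),
])

print(outputs["features"].data_format)  # → array/float

for index in range(len(outputs)):
    outputs[index].write({"value": index})

for output in outputs[0:2]:
    output.write(None, end_data_index=5)

print(outputs["scores"].written)  # → [({'value': 1}, None), (None, 5)]
```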

We’ll look at the usage of this method through some examples in the following sections.

Output naming¶

As with its inputs, each algorithm assigns a name of its choice to each output (see section Input naming for more details) by including it in the JSON declaration of the algorithm.

{
    ...
    "groups": [
        {
            "inputs": {
                ...
            },
            "outputs": {
                "name1": {
                    "type": "data_format1"
                },
                "name2": {
                    "type": "data_format2"
                }
            }
        }
    ],
    ...
}
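Because outputs are declared in JSON, their names and data formats can be read back with Python's standard `json` module. The snippet below is a small sketch that parses a complete version of the declaration above and maps each output name to its declared type. In that version the `...` elisions are dropped, and one input, `image`, is added as a hypothetical placeholder so the `inputs` object is not empty. The `output_types` helper is also hypothetical.

```python
import json

# Fully spelled-out variant of the declaration above ('image' is hypothetical)
declaration = json.loads("""
{
  "groups": [
    {
      "inputs": {
        "image": {"type": "image/rgb"}
      },
      "outputs": {
        "name1": {"type": "data_format1"},
        "name2": {"type": "data_format2"}
      }
    }
  ]
}
""")


def output_types(decl):
    """Map every declared output name to its data format, across all groups."""
    types = {}
    for group in decl["groups"]:
        for name, spec in group.get("outputs", {}).items():
            types[name] = spec["type"]
    return types


print(output_types(declaration))  # → {'name1': 'data_format1', 'name2': 'data_format2'}
```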

Example 1: Write one block of data for each received block of data¶

Fig. 5 Example 1: 6 images as input, 6 blocks of data produced¶

Consider the example toolchain in Fig. 5. We will implement a data-driven algorithm that writes one block of data on the output of the block for each image received on its inputs. This is the simplest case.

{
    ...
    "groups": [
        {
            "inputs": {
                "images": {
                    "type": "image/rgb"
                },
                "labels": {
                    "type": "label"
                }
            },
            "outputs": {
                "features": {
                    "type": "array/float"
                }
            }
        }
    ],
    ...
}

class Algorithm:

    def process(self, inputs, data_loaders, outputs):

        # Compute something from inputs['images'].data and inputs['labels'].data
        # and store the result in 'data'
        data = ...

        # Write our data block on the output
        outputs['features'].write(data)

        return True

The structure of the data object depends on the data format assigned to the output.
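The one-write-per-block behaviour of Example 1 can be checked outside the platform with a sketch that drives an algorithm mirroring it using hypothetical stand-ins. `ToyInput` holds the current block, `RecordingOutput` collects writes, and a plain loop plays the role of the platform by calling `process()` once per image. The feature computation is an arbitrary placeholder for `data = ...`: it takes the mean of a flat list of pixel values. The `{'value': [...]}` layout of the written block is likewise an assumption, because the real layout depends on the `array/float` data format.

```python
class ToyInput:
    """Hypothetical input exposing only the current block through .data."""

    def __init__(self):
        self.data = None


class RecordingOutput:
    """Hypothetical output that stores every block written to it."""

    def __init__(self):
        self.blocks = []

    def write(self, data, end_data_index=None):
        self.blocks.append(data)


class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        image = inputs['images'].data
        # Placeholder feature: the mean pixel value of the image
        data = {'value': [sum(image) / len(image)]}
        outputs['features'].write(data)
        return True


# Six toy "images" (flat lists of pixel values) and their labels
images = [[0, 2], [4, 4], [1, 3], [8, 0], [5, 5], [6, 2]]
labels = [0, 1, 0, 1, 1, 0]

inputs = {'images': ToyInput(), 'labels': ToyInput()}
outputs = {'features': RecordingOutput()}
algorithm = Algorithm()

# Stand-in for the platform: one process() call per synchronized block
for image, label in zip(images, labels):
    inputs['images'].data = image
    inputs['labels'].data = label
    algorithm.process(inputs, None, outputs)

print(len(outputs['features'].blocks))  # → 6
```

Six images in, six blocks out, as in Fig. 5.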

Example 2: Skip some blocks of data¶

Fig. 6 Example 2: 6 images as input, 4 blocks of data produced, 2 blocks of data skipped¶

Consider the example toolchain in Fig. 6. This time, our algorithm uses a criterion to decide whether it can perform its computation on an image, and tells the platform when no data is available for a particular data index.

{
    ...
    "groups": [
        {
            "inputs": {
                "images": {
                    "type": "image/rgb"
                },
                "labels": {
                    "type": "label"
                }
            },
            "outputs": {
                "features": {
                    "type": "array/float"
                }
            }
        }
    ],
    ...
}

class Algorithm:

    def process(self, inputs, data_loaders, outputs):

        # Use a criterion on the image to determine if we can perform our
        # computation on it or not
        if self.can_compute(inputs['images'].data):

            # Compute something from inputs['images'].data and inputs['labels'].data
            # and store the result in 'data'
            data = ...

            # Write our data block on the output
            outputs['features'].write(data)

        else:
            # Tell the platform that no data is available for this image
            outputs['features'].write(None)

        return True

    def can_compute(self, image):

        # Implementation of our criterion
        ...

        return True  # or False
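Example 2 can be exercised the same way. In the sketch below, the criterion is a hypothetical one in which only non-empty images can be processed, and the toy input set is built so that two of the six images fail it. `ToyInput` and `RecordingOutput` are again hypothetical stand-ins, and the loop stands in for the platform. The `{'value': [...]}` block layout is an assumption. Note that each skipped image still produces a `write(None)`, so the output receives one entry per data index.

```python
class ToyInput:
    """Hypothetical input exposing only the current block through .data."""

    def __init__(self):
        self.data = None


class RecordingOutput:
    """Hypothetical output that stores every block written to it."""

    def __init__(self):
        self.blocks = []

    def write(self, data, end_data_index=None):
        self.blocks.append(data)


class Algorithm:

    def process(self, inputs, data_loaders, outputs):
        image = inputs['images'].data
        if self.can_compute(image):
            # Placeholder feature: the mean pixel value of the image
            outputs['features'].write({'value': [sum(image) / len(image)]})
        else:
            # No data for this image: tell the platform explicitly
            outputs['features'].write(None)
        return True

    def can_compute(self, image):
        # Hypothetical criterion: only non-empty images can be processed
        return len(image) > 0


images = [[0, 2], [], [1, 3], [8, 0], [], [6, 2]]
labels = [0, 1, 0, 1, 1, 0]

inputs = {'images': ToyInput(), 'labels': ToyInput()}
outputs = {'features': RecordingOutput()}
algorithm = Algorithm()

for image, label in zip(images, labels):
    inputs['images'].data = image
    inputs['labels'].data = label
    algorithm.process(inputs, None, outputs)

blocks = outputs['features'].blocks
print(sum(b is not None for b in blocks), blocks.count(None))  # → 4 2
```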

Soft loop communication¶

The processor and evaluator algorithm components of the soft loop macro block communicate with each other through a LoopChannel object. This object defines the two data formats used for the request and for the answer that transit through the loop channel. This class is only meant to be used by algorithm implementers.

Migrating from API v1 to API v2¶

Algorithms written using BEAT's algorithm API v1 can still be run under the v2 execution model. They are now considered legacy algorithms and should be ported to API v2 as soon as possible.

API v2 provides two different types of algorithms:

- Sequential
- Autonomous

The Sequential type follows the same code execution model as the v1 API, meaning that the process method is called once for each input item.

The Autonomous type allows the developer to load the input data at will; therefore, the process method is called only once. This makes it possible, for example, to optimize the loading of data into GPU memory for faster execution.

The most straightforward migration path from v1 to v2 is to turn the algorithm into a Sequential one, which requires only a few code changes.
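The difference between the two execution models can be sketched with a toy runner. This is not how BEAT schedules algorithms internally. `run_sequential` and `run_autonomous` are hypothetical helpers, and the `process` signatures used here are simplified and do not match BEAT's. The sketch only illustrates the call pattern. A Sequential algorithm's `process` is called once per input item. An Autonomous algorithm's `process` is called once and reads the data itself.

```python
class SequentialSum:
    """Sequential style: process() sees one item per call."""

    def __init__(self):
        self.calls = 0
        self.total = 0

    def process(self, item):
        self.calls += 1
        self.total += item
        return True


class AutonomousSum:
    """Autonomous style: process() is called once and loads data at will."""

    def __init__(self):
        self.calls = 0
        self.total = 0

    def process(self, load_all):
        self.calls += 1
        # Free to read everything in one go, e.g. to move a batch to a GPU
        self.total = sum(load_all())
        return True


def run_sequential(algorithm, items):
    # Hypothetical runner: one process() call per item, stop on failure
    for item in items:
        if not algorithm.process(item):
            return False
    return True


def run_autonomous(algorithm, items):
    # Hypothetical runner: a single process() call with a loader callback
    return algorithm.process(lambda: list(items))


items = [3, 1, 4, 1, 5]
seq, auto = SequentialSum(), AutonomousSum()
run_sequential(seq, items)
run_autonomous(auto, items)

print(seq.calls, auto.calls)    # → 5 1
print(seq.total == auto.total)  # → True
```

Both styles compute the same result; only the number of `process` calls and who drives the data loading differ.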

API V1:

class Algorithm:

    def setup(self, parameters):
        self.sync = parameters['sync']
        return True

    def process(self, inputs, outputs):
        if inputs[self.sync].isDataUnitDone():
            outputs['out'].write({
                'value': inputs['in1'].data.value + inputs['in2'].data.value,
            })
        return True

API V2 sequential:

class Algorithm:

    def setup(self, parameters):
        self.sync = parameters['sync']
        return True

    def process(self, inputs, data_loaders, outputs):
        if inputs[self.sync].isDataUnitDone():
            outputs['out'].write({
                'value': inputs['in1'].data.value + inputs['in2'].data.value,
            })
        return True

API V2 autonomous:

class Algorithm:

    def setup(self, parameters):
        self.sync = parameters['sync']
        return True

    def process(self, data_loaders, outputs):
        data_loader = data_loaders.loaderOf('in1')

        for i in range(data_loader.count(self.sync)):
            view = data_loader.view(self.sync, i)

            (data, start, end) = view[view.count() - 1]

            outputs['out'].write(
                {'value': data['in1'].value + data['in2'].value},
                end,
            )

        return True
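The autonomous version can be exercised without the platform by feeding it toy stand-ins. `ToyDataLoader`, `ToyView`, `ToyLoaderList` and `RecordingOutput` are hypothetical and only provide the members the example calls: `loaderOf()`, `count()`, `view()`, indexing a view, and `write()`. They assume the simplest layout, in which `in1` and `in2` carry exactly one value per data unit. Under that assumption each view holds a single `(data, start, end)` entry whose start and end indices are equal. Real data loaders and views may behave differently. The algorithm class is the autonomous example above, unchanged.

```python
from types import SimpleNamespace


class ToyView:
    """Hypothetical view: an indexable list of (data, start, end) entries."""

    def __init__(self, entries):
        self._entries = entries

    def count(self):
        return len(self._entries)

    def __getitem__(self, k):
        return self._entries[k]


class ToyDataLoader:
    """Hypothetical loader where every input has one value per data unit."""

    def __init__(self, **streams):
        self._streams = streams

    def count(self, input_name):
        return len(self._streams[input_name])

    def view(self, input_name, index):
        # A single entry covering data unit 'index' on every input
        data = {name: SimpleNamespace(value=values[index])
                for name, values in self._streams.items()}
        return ToyView([(data, index, index)])


class ToyLoaderList:
    """Hypothetical list holding a single loader for all inputs."""

    def __init__(self, loader):
        self._loader = loader

    def loaderOf(self, input_name):
        return self._loader


class RecordingOutput:
    def __init__(self):
        self.writes = []

    def write(self, data, end_data_index=None):
        self.writes.append((data, end_data_index))


class Algorithm:

    def setup(self, parameters):
        self.sync = parameters['sync']
        return True

    def process(self, data_loaders, outputs):
        data_loader = data_loaders.loaderOf('in1')
        for i in range(data_loader.count(self.sync)):
            view = data_loader.view(self.sync, i)
            (data, start, end) = view[view.count() - 1]
            outputs['out'].write(
                {'value': data['in1'].value + data['in2'].value},
                end,
            )
        return True


loader = ToyDataLoader(in1=[1, 2, 3], in2=[10, 20, 30])
outputs = {'out': RecordingOutput()}
algorithm = Algorithm()
algorithm.setup({'sync': 'in1'})
algorithm.process(ToyLoaderList(loader), outputs)

print(outputs['out'].writes)
# → [({'value': 11}, 0), ({'value': 22}, 1), ({'value': 33}, 2)]
```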