Research

My research interests lie in the connections between human cognition and deep learning, specifically the relationship between deep attention-based models and Bayesian nonparametrics for natural language processing. You can find my papers on Google Scholar, arXiv, and DeepAI.

2023

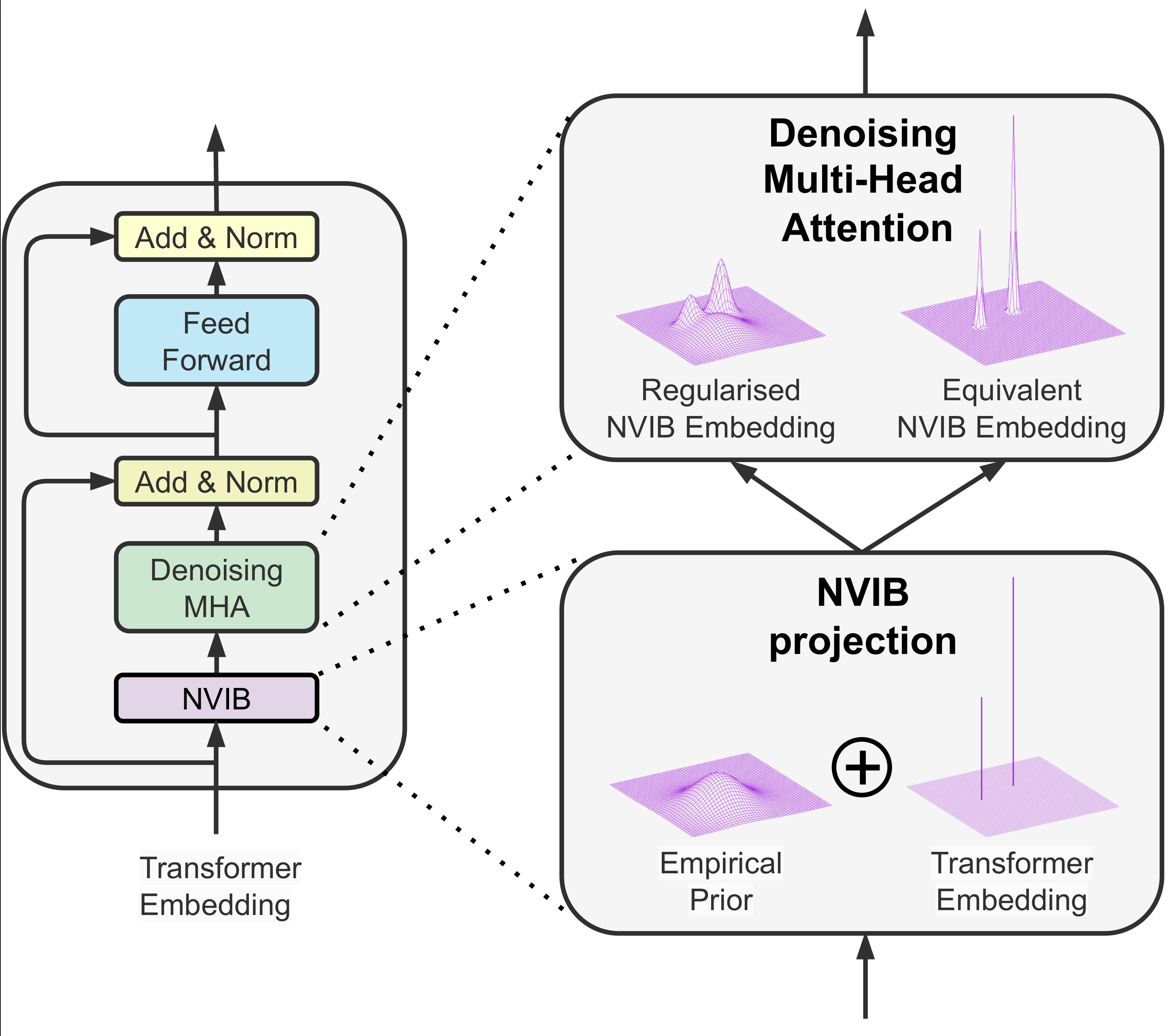

Nonparametric Variational Regularisation of Pretrained Transformers. F. Fehr, J. Henderson. arXiv, 2023. (Paper)

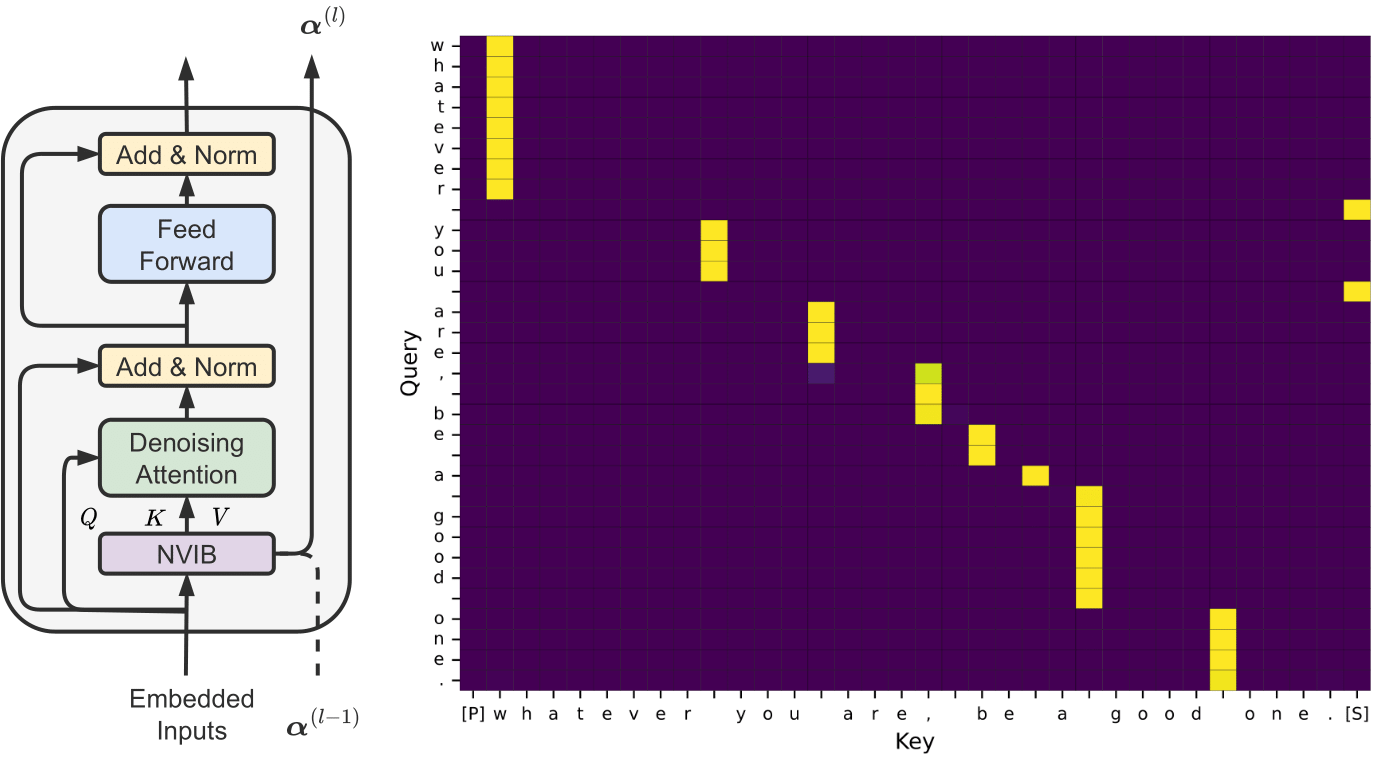

Learning to Abstract with Nonparametric Variational Information Bottleneck. M. Behjati, F. Fehr, J. Henderson. EMNLP, 2023. (Paper) (Demo) (Poster) (Code)

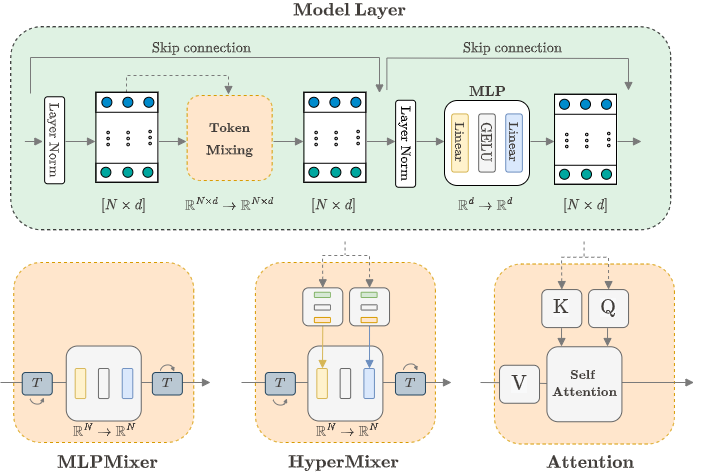

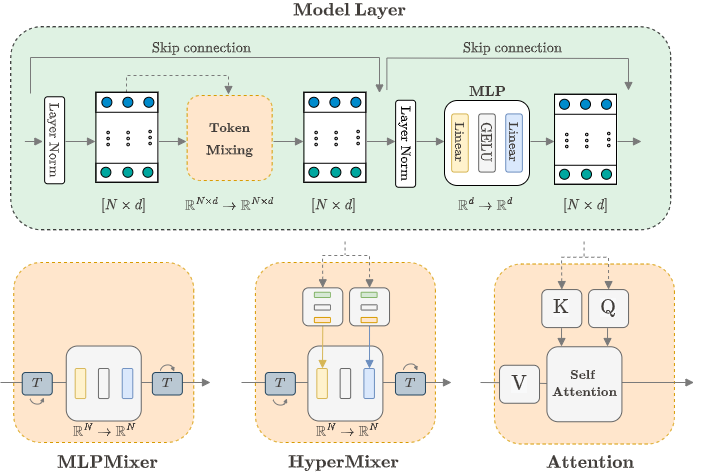

HyperMixer: An MLP-based Low Cost Alternative to Transformers. F. Mai, A. Pannatier, F. Fehr, H. Chen, F. Marelli, F. Fleuret, J. Henderson. ACL, 2023. (Paper) (Poster) (Code)

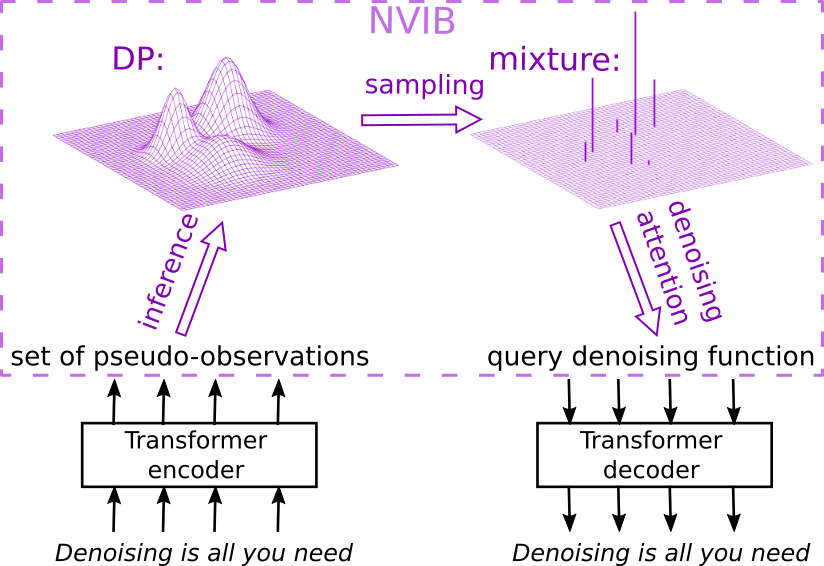

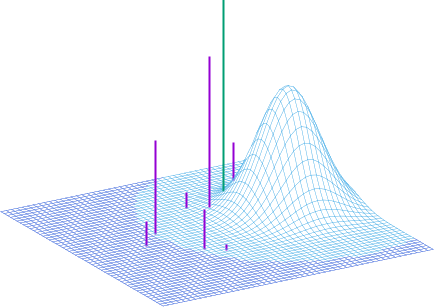

A Variational AutoEncoder for Transformers with Nonparametric Variational Information Bottleneck. J. Henderson, F. Fehr. ICLR, 2023. (Paper) (Poster) (Code)

2022

A Variational AutoEncoder for Transformers with Nonparametric Variational Information Bottleneck. J. Henderson, F. Fehr. arXiv, 2022. (Paper)

HyperMixer: An MLP-based Green AI Alternative to Transformers. F. Mai, A. Pannatier, F. Fehr, H. Chen, F. Marelli, F. Fleuret, J. Henderson. arXiv, 2022. (Paper)

2020

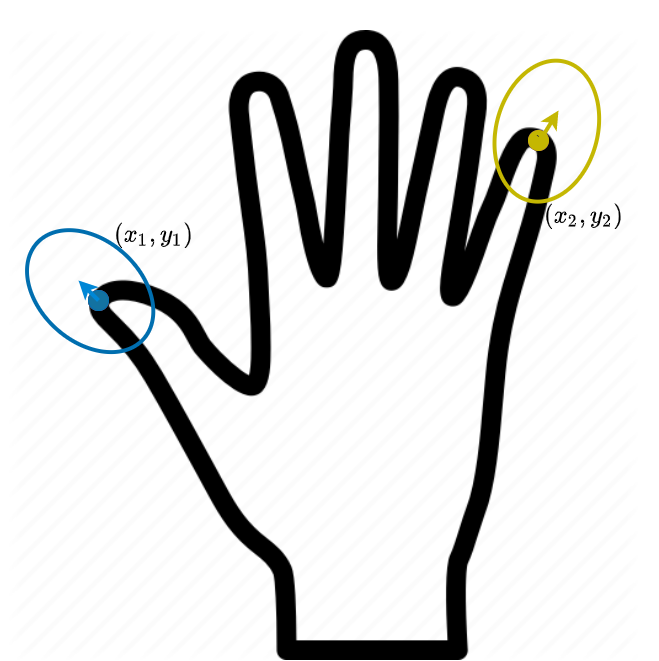

Modelling non-linearity in 3D shapes: A comparative study of Gaussian process morphable models and variational autoencoders for 3D shape data. F. Fehr. OpenUCT MSc Thesis, 2020. (Paper)

2018

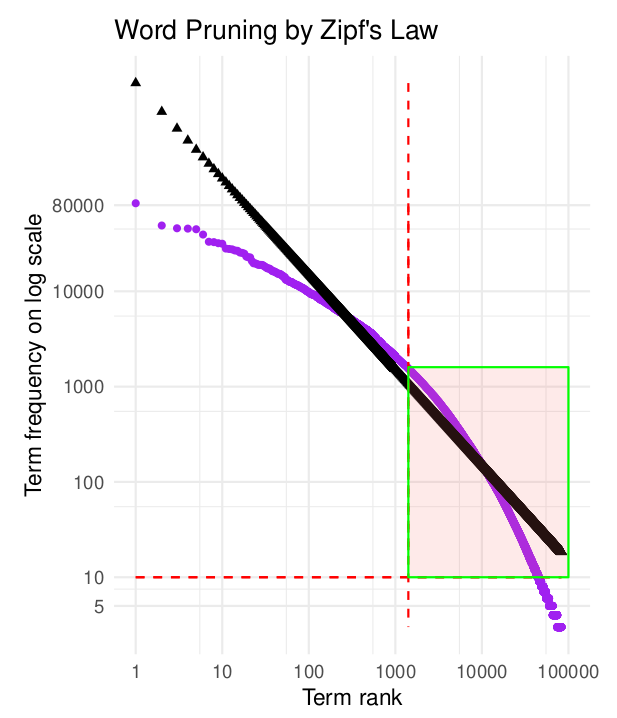

Text Content Classification on News Articles. F. Fehr, S. Soutar. UCT BBusSc Thesis, 2018. (Paper)