Running DeepPixBiS on OULU and REPLAY-MOBILE Datasets

This section explains how to run a complete face presentation attack detection (PAD) experiment with the DeepPixBiS face PAD system, including the training workflow.

The system discussed in this section was introduced in the publication [GM19]. We strongly recommend reading it to better understand the workflow described here.

Note

For the experiments discussed in this section, the OULU-NPU and REPLAY-MOBILE databases need to be downloaded and installed on your system. Please refer to the Executing Baseline Algorithms section in the documentation of the bob.pad.face package for more details on how to set up the databases and run face PAD experiments.

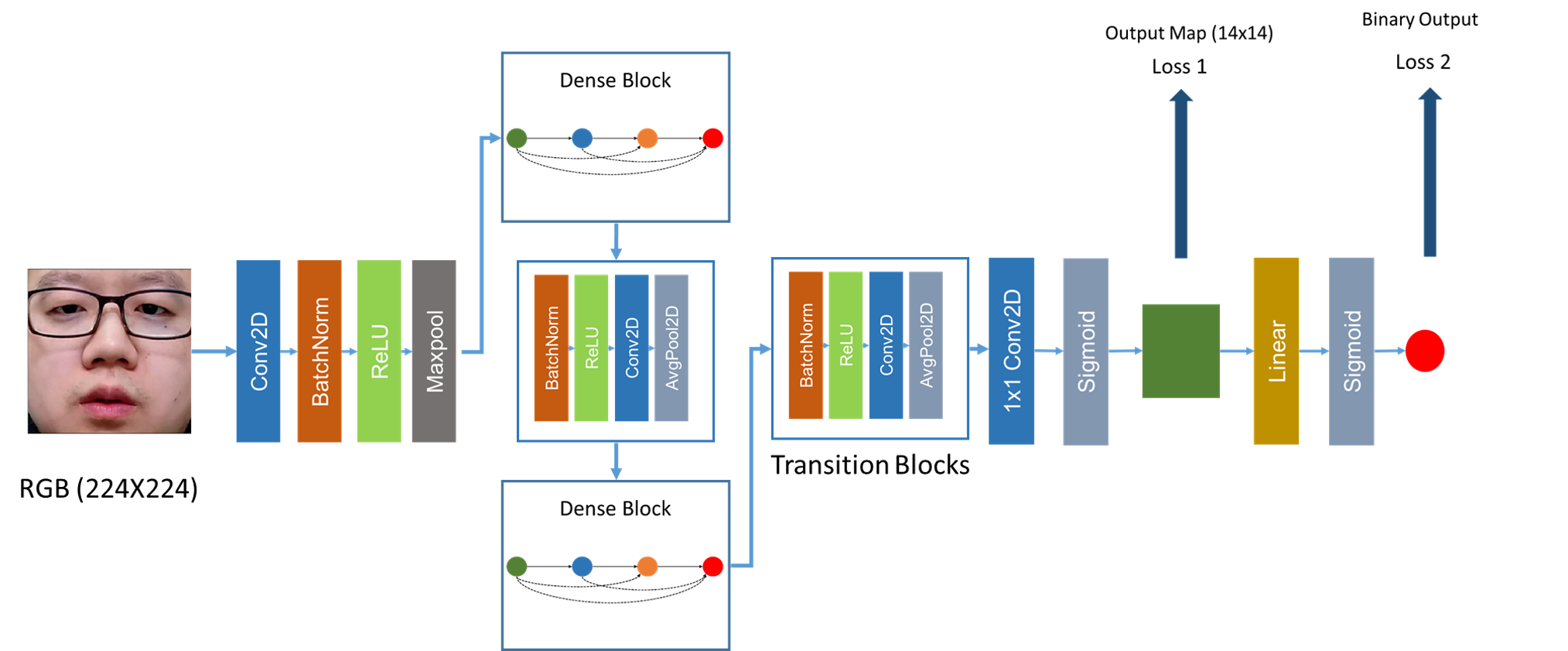

The architecture of the proposed framework is illustrated in the figure below

Fig. 2 Illustration of DeepPixBiS architecture.¶

Running experiments with the DeepPixBiS architecture on OULU-NPU Protocol_1

As described in the paper [GM19], the workflow is composed of three main steps: preprocessing the data, training the network, and running the pipeline. A final step evaluates the resulting scores.

1. Preprocessing data

The raw data must first be preprocessed with face detection, alignment and cropping. The following command runs only the preprocessing step:

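bin/spoof.py \
    oulunpu \
    iqm-svm \
    --groups train dev eval \
    --protocol Protocol_1 \
    --execute-only preprocessing \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <PATH_TO_STORE_THE_RESULTS>

Here, oulunpu selects the OULU-NPU database, and iqm-svm is required by spoof.py but is not used, since only the preprocessing step is executed (--execute-only preprocessing). --groups and --protocol select the groups and the protocol to process, --allow-missing-files lets the run continue when some files fail, and --sub-directory sets the folder where the results are stored. The --grid idiap option submits the jobs to the Idiap grid; remove it if you are not an Idiap user.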

Repeat the command for all protocols of the OULU-NPU dataset, replacing Protocol_1 with each of the following: ‘Protocol_1’, ‘Protocol_2’, ‘Protocol_3_1’, ‘Protocol_3_2’, ‘Protocol_3_3’, ‘Protocol_3_4’, ‘Protocol_3_5’, ‘Protocol_3_6’, ‘Protocol_4_1’, ‘Protocol_4_2’, ‘Protocol_4_3’, ‘Protocol_4_4’, ‘Protocol_4_5’, ‘Protocol_4_6’.

After running these commands, the preprocessed files from all the protocols will be stored in <PATH_TO_STORE_THE_RESULTS>/preprocessed/
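Instead of editing the command by hand for every protocol, the runs can be scripted. The sketch below is a POSIX shell loop over the OULU-NPU protocols listed above that reuses the options of the preprocessing command (without --grid idiap). DRY_RUN, OUT_DIR and run are hypothetical names introduced here: by default the script only prints each command, and setting DRY_RUN=0 executes them.

```shell
#!/bin/sh
# Sketch: preprocess every OULU-NPU protocol with the options shown above.
# DRY_RUN, OUT_DIR and run() are illustrative names, not part of bob.pad.face.
set -eu

OUT_DIR="${OUT_DIR:-<PATH_TO_STORE_THE_RESULTS>}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

PROTOCOLS="Protocol_1 Protocol_2
Protocol_3_1 Protocol_3_2 Protocol_3_3 Protocol_3_4 Protocol_3_5 Protocol_3_6
Protocol_4_1 Protocol_4_2 Protocol_4_3 Protocol_4_4 Protocol_4_5 Protocol_4_6"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

for protocol in $PROTOCOLS; do
  run bin/spoof.py oulunpu iqm-svm \
    --groups train dev eval \
    --protocol "$protocol" \
    --execute-only preprocessing \
    --allow-missing-files \
    --sub-directory "$OUT_DIR"
done
```

Idiap users can add --grid idiap to the command inside the loop. Check the printed commands first, then rerun with DRY_RUN=0 and OUT_DIR set to your results folder.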

2. Training DeepPixBiS model

Once preprocessing is done, the CNN for Protocol_1 can be trained as follows.

First, edit the config file cnn_trainer_config/oulu_deep_pixbis.py to set the preprocessed data folder and the output folder.

For other protocols, change the value of the protocol variable in the same config file and run the training again.

Note

Be sure to update <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py with the folder paths and protocol names.

bin/train_pixbis.py <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py -vvv
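Since the protocol is selected inside the config file, one way to train several protocols without overwriting your base config is to generate one copy per protocol. The sketch below assumes that cnn_trainer_config/oulu_deep_pixbis.py assigns the protocol on a single line of the form protocol = '...' and that the output folder is derived from it; both the line format and the make_protocol_config helper are assumptions, so check the actual file before relying on it.

```shell
#!/bin/sh
# Sketch: write a copy of the training config with a different protocol.
# ASSUMPTION: the base config sets the protocol on one line such as
#   protocol = 'Protocol_1'
# make_protocol_config is a hypothetical helper, not part of any bob package.
set -eu

make_protocol_config() {
  base_config=$1   # e.g. <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py
  protocol=$2      # e.g. Protocol_2
  out_config=$3    # path of the per-protocol copy to create
  if ! grep -q "^protocol *=" "$base_config"; then
    echo "no 'protocol = ...' line found in $base_config" >&2
    return 1
  fi
  # Rewrite only the top-level protocol assignment; all other lines are copied verbatim.
  sed "s/^protocol *=.*/protocol = '$protocol'/" "$base_config" > "$out_config"
}
```

For example, make_protocol_config <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py Protocol_2 oulu_deep_pixbis_Protocol_2.py followed by bin/train_pixbis.py oulu_deep_pixbis_Protocol_2.py -vvv would train Protocol_2 while leaving the original config untouched.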

Note

To train on a GPU, add the --use-gpu flag:

bin/train_pixbis.py <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py -vvv --use-gpu

On the Idiap grid, the job can be submitted as follows:

jman submit --queue gpu \
    --name deep_pixbis \
    --log-dir <FOLDER_TO_SAVE_THE_RESULTS>/logs/ \
    --environment="PYTHONUNBUFFERED=1" -- \
    bin/train_pixbis.py \
    <PATH_TO_CONFIG>/cnn_trainer_config/oulu_deep_pixbis.py \
    --use-gpu \
    -vv

When the script completes, the model with the lowest validation loss can be found at <CNN_OUTPUT_DIR>/Protocol_1/model_0_0.pth.

3. Running the pipeline (PAD Experiment)

Once CNN training is complete, the bob pipeline can be run using the trained model as the extractor.

Note

Be sure to update <PATH_TO_CONFIG>/deep_pix_bis.py so that MODEL_FILE points to the trained model of the specific protocol.

For Protocol_1, the pipeline is run with the following command:

bin/spoof.py \
    oulunpu \
    deep-pix-bis \
    --groups train dev eval \
    --protocol Protocol_1 \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <FOLDER_TO_SAVE_THE_RESULTS>

Here, deep-pix-bis is the configuration defining the preprocessor, extractor and algorithm, and --allow-missing-files keeps the execution going if some files are missing. The --grid idiap option is only for Idiap users; remove it otherwise.
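The Note above asks you to set MODEL_FILE in deep_pix_bis.py before running the pipeline. A minimal sketch of doing this from the shell, assuming the config assigns the model on a single line of the form MODEL_FILE = '...'; set_model_file is a hypothetical helper, and the line format is an assumption to verify against your copy of the config.

```shell
#!/bin/sh
# Sketch: point MODEL_FILE in the pipeline config at a trained model.
# ASSUMPTION: deep_pix_bis.py assigns the model on one line such as
#   MODEL_FILE = '/some/path/model_0_0.pth'
# set_model_file is a hypothetical helper; paths containing '|' or '&'
# would need escaping before being used in the sed replacement.
set -eu

set_model_file() {
  config=$1   # e.g. <PATH_TO_CONFIG>/deep_pix_bis.py
  model=$2    # e.g. <CNN_OUTPUT_DIR>/Protocol_1/model_0_0.pth
  if [ ! -f "$model" ]; then
    echo "model not found: $model" >&2
    return 1
  fi
  # Write to a temporary file and move it back, which avoids the
  # GNU/BSD differences in 'sed -i'.
  sed "s|^MODEL_FILE *=.*|MODEL_FILE = '$model'|" "$config" > "$config.tmp"
  mv "$config.tmp" "$config"
}
```

For example, set_model_file <PATH_TO_CONFIG>/deep_pix_bis.py <CNN_OUTPUT_DIR>/Protocol_1/model_0_0.pth refuses to edit the config if training has not produced the model yet.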

4. Evaluating results of face PAD Experiments

The resulting scores can then be evaluated:

bin/scoring_acer.py \
    -df <FOLDER_TO_SAVE_THE_RESULTS>/Protocol_1/scores/scores-dev \
    -ef <FOLDER_TO_SAVE_THE_RESULTS>/Protocol_1/scores/scores-eval \
    -l "DeepPixBiS" -s results
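Because the pipeline runs with --allow-missing-files, it can be worth confirming that both score files exist and are non-empty before evaluating them. A minimal sketch; check_scores is a hypothetical helper, and the folder layout <FOLDER>/<PROTOCOL>/scores/scores-dev and scores-eval is taken from the evaluation command above.

```shell
#!/bin/sh
# Sketch: verify that the dev and eval score files of one protocol exist
# and are non-empty. check_scores is a hypothetical helper.
set -eu

check_scores() {
  results_dir=$1   # e.g. <FOLDER_TO_SAVE_THE_RESULTS>
  protocol=$2      # e.g. Protocol_1
  rc=0
  for group in dev eval; do
    scores="$results_dir/$protocol/scores/scores-$group"
    if [ ! -s "$scores" ]; then
      echo "missing or empty: $scores" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Chaining it in front of the evaluation, as in check_scores <FOLDER_TO_SAVE_THE_RESULTS> Protocol_1 && bin/scoring_acer.py ..., runs the evaluation only when both files are present.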

Running experiments with the DeepPixBiS architecture on the REPLAY-MOBILE grandtest protocol

1. Preprocessing data

The raw data must first be preprocessed with face detection, alignment and cropping. The following command runs only the preprocessing step:

bin/spoof.py \
    replay-mobile \
    iqm-svm \
    --groups train dev eval \
    --protocol grandtest \
    --execute-only preprocessing \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <RM_PATH_TO_STORE_THE_RESULTS>

Here, replay-mobile selects the REPLAY-MOBILE database, and iqm-svm is required by spoof.py but unused. --allow-missing-files lets the run continue when some files fail, and --sub-directory sets the folder where the results are stored. The --grid idiap option is only for Idiap users; remove it otherwise.

After running this command, the preprocessed files will be stored in <RM_PATH_TO_STORE_THE_RESULTS>/preprocessed/.

2. Training DeepPixBiS model

Once preprocessing is done, the CNN for the grandtest protocol can be trained as follows.

First, edit the config file cnn_trainer_config/rm_deep_pixbis.py to set the preprocessed data folder and the output folder.

Note

Be sure to update <PATH_TO_CONFIG>/cnn_trainer_config/rm_deep_pixbis.py with the folder paths and protocol names.

bin/train_pixbis.py <PATH_TO_CONFIG>/cnn_trainer_config/rm_deep_pixbis.py -vvv

Note

To train on a GPU, add the --use-gpu flag:

bin/train_pixbis.py <PATH_TO_CONFIG>/cnn_trainer_config/rm_deep_pixbis.py -vvv --use-gpu

On the Idiap grid, the job can be submitted as follows:

jman submit --queue gpu \
    --name deep_pixbis \
    --log-dir <FOLDER_TO_SAVE_THE_RESULTS>/logs/ \
    --environment="PYTHONUNBUFFERED=1" -- \
    bin/train_pixbis.py \
    <PATH_TO_CONFIG>/cnn_trainer_config/rm_deep_pixbis.py \
    --use-gpu \
    -vv

When the script completes, the model with the lowest validation loss can be found at <CNN_OUTPUT_DIR>/grandtest/model_0_0.pth.

3. Running the pipeline (PAD Experiment)

Once CNN training is complete, the bob pipeline can be run using the trained model as the extractor.

Note

Be sure to update <PATH_TO_CONFIG>/deep_pix_bis.py so that MODEL_FILE points to the trained model of the specific protocol.

For grandtest, the pipeline is run with the following command:

bin/spoof.py \
    replay-mobile \
    deep-pix-bis \
    --groups train dev eval \
    --protocol grandtest \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <RM_FOLDER_TO_SAVE_THE_RESULTS>

Here, deep-pix-bis is the configuration defining the preprocessor, extractor and algorithm, and --allow-missing-files keeps the execution going if some files are missing. The --grid idiap option is only for Idiap users; remove it otherwise.

4. Evaluating results of face PAD Experiments

The resulting scores can then be evaluated:

bob pad metrics \
    <RM_FOLDER_TO_SAVE_THE_RESULTS>/grandtest/scores/scores-dev \
    -ef <RM_FOLDER_TO_SAVE_THE_RESULTS>/grandtest/scores/scores-eval \
    -e

Note

Add the groups argument in all the protocols.

Running cross-database experiments with DeepPixBiS

1. Trained on OULU, tested on RM

Here, the model trained on OULU-NPU Protocol_1 is tested on the REPLAY-MOBILE grandtest protocol, reusing the models trained in the first two sets of experiments.

To do so, set MODEL_FILE in <PATH_TO_CONFIG>/deep_pix_bis.py to the model obtained for OULU-NPU Protocol_1, and run the following command:

bin/spoof.py \
    replay-mobile \
    deep-pix-bis \
    --groups train dev eval \
    --protocol grandtest \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <OULU_ON_RM_FOLDER_TO_SAVE_THE_RESULTS>

The --grid idiap option is only for Idiap users; remove it otherwise.

2. Trained on RM, tested on OULU

Here, the model trained on the REPLAY-MOBILE grandtest protocol is tested on OULU-NPU Protocol_1.

To do so, set MODEL_FILE in <PATH_TO_CONFIG>/deep_pix_bis.py to the model obtained for grandtest in REPLAY-MOBILE, and run the following command:

bin/spoof.py \
    oulunpu \
    deep-pix-bis \
    --groups train dev eval \
    --protocol Protocol_1 \
    --allow-missing-files \
    --grid idiap \
    --sub-directory <RM_ON_OULU_FOLDER_TO_SAVE_THE_RESULTS>

The --grid idiap option is only for Idiap users; remove it otherwise.

3. Evaluating results of cross-database experiments

To evaluate the model trained on OULU and tested on RM:

bob pad metrics \
    <OULU_ON_RM_FOLDER_TO_SAVE_THE_RESULTS>/grandtest/scores/scores-dev \
    -ef <OULU_ON_RM_FOLDER_TO_SAVE_THE_RESULTS>/grandtest/scores/scores-eval \
    -e

To evaluate the model trained on RM and tested on OULU:

bob pad metrics \
    <RM_ON_OULU_FOLDER_TO_SAVE_THE_RESULTS>/Protocol_1/scores/scores-dev \
    -ef <RM_ON_OULU_FOLDER_TO_SAVE_THE_RESULTS>/Protocol_1/scores/scores-eval \
    -e
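Both cross-database evaluations follow the same pattern and differ only in the results folder and the protocol. The sketch below rebuilds the two bob pad metrics commands from those two values, printing them unless DRY_RUN=0; run_metrics and DRY_RUN are hypothetical names introduced here, and the bob pad metrics arguments are copied verbatim from the commands above.

```shell
#!/bin/sh
# Sketch: build the cross-database evaluation commands from a results
# folder and a protocol name. run_metrics and DRY_RUN are hypothetical names;
# the bob pad metrics arguments are copied from the commands above.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run_metrics() {
  results_dir=$1   # folder given to --sub-directory
  protocol=$2      # protocol the model was tested on
  # Reuse the function's positional parameters to hold the command safely.
  set -- bob pad metrics \
    "$results_dir/$protocol/scores/scores-dev" \
    -ef "$results_dir/$protocol/scores/scores-eval" \
    -e
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

run_metrics "<OULU_ON_RM_FOLDER_TO_SAVE_THE_RESULTS>" grandtest   # trained on OULU, tested on RM
run_metrics "<RM_ON_OULU_FOLDER_TO_SAVE_THE_RESULTS>" Protocol_1  # trained on RM, tested on OULU
```

Replace the two placeholders with your folders before running it with DRY_RUN=0.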

Using pretrained models

Warning

Model training involves some randomness, even when all seeds are set. Variations can arise from the platform, the PyTorch version, non-deterministic GPU operations, and so on. See PyTorch Reproducibility for how to achieve the best reproducibility in PyTorch. To reproduce the exact results reported in the paper, we suggest using the pretrained models shipped with the package.

The pretrained models are located at Link Pretrained Models. Unzip the files and use the model provided in the folder for each protocol; model names follow the format <DATABASE>_<PROTOCOL>_model_0_0.pth. Models trained on both OULU and RM are provided. To use a pretrained model, set the MODEL_FILE variable in the DeepPixBiS pipeline config (deep_pix_bis.py) to the corresponding file in the unzipped folder, then run the pipeline.
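Given the naming format above, the path of a pretrained model can be assembled and checked before it is written into MODEL_FILE. A minimal sketch; pretrained_model is a hypothetical helper, and the exact <DATABASE> and <PROTOCOL> spellings should be copied from the file names in the unzipped folder rather than guessed.

```shell
#!/bin/sh
# Sketch: locate a pretrained model named <DATABASE>_<PROTOCOL>_model_0_0.pth.
# pretrained_model is a hypothetical helper; take the DATABASE/PROTOCOL
# spellings from the actual file names in the unzipped folder.
set -eu

pretrained_model() {
  models_dir=$1   # the unzipped pretrained-models folder
  database=$2
  protocol=$3
  model="$models_dir/${database}_${protocol}_model_0_0.pth"
  if [ ! -f "$model" ]; then
    # List what is available so the correct spelling can be picked.
    echo "no pretrained model at $model; available files:" >&2
    ls "$models_dir" >&2 || true
    return 1
  fi
  echo "$model"
}
```

The printed path is the value to assign to MODEL_FILE in deep_pix_bis.py.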