Evrard, P., Gribovskaya, E., Calinon, S., Billard, A. and Kheddar, A. (2009)

Teaching physical collaborative tasks: Object-lifting case study with a humanoid

In Proc. of the IEEE-RAS Intl Conf. on Humanoid Robots (Humanoids), Paris, France, pp. 399-404.

Abstract

This paper presents the application of a statistical framework that endows a humanoid robot with the ability to perform a collaborative manipulation task with a human operator. We investigate to what extent the dynamics of the motion and the haptic communication process that takes place during physical collaborative tasks can be encapsulated by the probabilistic model. This framework encodes the dataset in a Gaussian Mixture Model, whose components represent the local correlations across the variables that characterize the task. A set of demonstrations is performed using a bilateral coupling teleoperation setup; the statistical model is then trained in a pure follower/leader role distribution mode, alternating between the human and the robot. The task is reproduced using Gaussian Mixture Regression. We present the probabilistic model and the experimental results obtained on the humanoid platform HRP-2. Preliminary results assess our theory on switching behavior modes in dyad collaborative tasks: when the task was reproduced with users who were not instructed to behave in either a follower or a leader mode, the robot switched automatically between the learned leader and follower behaviors.

Bibtex reference

@inproceedings{Evrard09Hum,

author = "P. Evrard and E. Gribovskaya and S. Calinon and A. Billard and A. Kheddar",

title = "Teaching physical collaborative tasks: Object-lifting case study with a humanoid",

booktitle = "Proc. {IEEE-RAS} Intl Conf. on Humanoid Robots ({H}umanoids)",

year = "2009",

month="December",

location="Paris, France",

pages="399--404"

}

Video

Learning of a collaborative manipulation skill with HRP-2 by showing multiple demonstrations of the skill in slightly different situations (different initial positions and orientations of the object). The robot stands in a half-sitting posture during the experiment, and the 7 DOFs of the right arm and torso are used. Built-in stereoscopic vision is used to track colored patches, and a 6-axis force sensor at the right wrist is used to track the interaction forces with the environment during task execution. The robot is teleoperated through a Phantom Desktop haptic device from Sensable Technologies. An impedance controller is used to control the robot.

This work is in collaboration with the Joint Japanese-French Robotics Laboratory (JRL) at AIST, Tsukuba, Japan.

Source code

Download

Download GMR Dynamics source code

Usage

Unzip the file and run 'demo1' in Matlab.

Reference

- Calinon, S., D'halluin, F., Sauser, E.L., Caldwell, D.G. and Billard, A.G. (2009) A probabilistic approach based on dynamical systems to learn and reproduce gestures by imitation. IEEE Robotics and Automation Magazine.

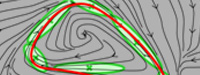

Demo 1 - Demonstration of a trajectory learning system robust to perturbation based on Gaussian Mixture Regression (GMR)

This program first encodes a trajectory, represented by time 't', position 'x' and velocity 'dx', in a joint distribution P(t,x,dx) through a Gaussian Mixture Model (GMM), using the Expectation-Maximization (EM) algorithm. Gaussian Mixture Regression (GMR) is then used to estimate P(x,dx|t), which yields another GMM that refines the joint distribution model of position and velocity.
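The conditioning step described above can be illustrated with a minimal Python sketch. It is not the Matlab code from the download. It assumes a scalar time input and a two-dimensional output (x, dx), and it skips EM training by using a hand-set two-component model. The names `GMM`, `condition_on_time` and `expected_output`, as well as all numeric values, are hypothetical and chosen only for illustration.

```python
import math

def gauss_pdf(t, mean, var):
    """Density of a 1-D Gaussian evaluated at t."""
    return math.exp(-0.5 * (t - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def condition_on_time(gmm, t):
    """Condition a GMM over (t, x, dx) on t; returns a GMM over (x, dx)."""
    raw = [c["prior"] * gauss_pdf(t, c["mu_t"], c["var_t"]) for c in gmm]
    total = sum(raw)
    conditioned = []
    for c, w in zip(gmm, raw):
        # Regression gain: cov(output, t) / var(t), one entry per output dimension.
        gain = [s / c["var_t"] for s in c["cov_ot"]]
        mean = [m + g * (t - c["mu_t"]) for m, g in zip(c["mu_o"], gain)]
        # Conditional covariance (Schur complement of var_t).
        cov = [[c["cov_oo"][i][j] - gain[i] * c["cov_ot"][j] for j in range(2)]
               for i in range(2)]
        conditioned.append({"weight": w / total, "mean": mean, "cov": cov})
    return conditioned

def expected_output(conditioned):
    """Weighted mean of the conditioned components, i.e. E[(x, dx) | t]."""
    return [sum(c["weight"] * c["mean"][i] for c in conditioned) for i in range(2)]

# Hypothetical hand-set model standing in for an EM-trained GMM.
GMM = [
    {"prior": 0.5, "mu_t": 0.0, "var_t": 0.1, "mu_o": [0.0, 1.0],
     "cov_ot": [0.1, 0.0], "cov_oo": [[0.2, 0.0], [0.0, 0.05]]},
    {"prior": 0.5, "mu_t": 1.0, "var_t": 0.1, "mu_o": [1.0, 0.0],
     "cov_ot": [0.05, -0.05], "cov_oo": [[0.1, 0.0], [0.0, 0.1]]},
]

if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0):
        print(t, expected_output(condition_on_time(GMM, t)))
```

Each input component contributes one output component, so the result is again a mixture over (x, dx) whose weights depend on how well each component explains the queried time.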

The learned skill can then be reproduced by combining an estimate of P(dx|x) with an attractor to the demonstrated trajectories.
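One possible way to combine the two terms is sketched below in Python for a one-dimensional toy case. This is an assumption about the general idea, not the controller used in the demo: the velocity command is taken as the GMR estimate of E[dx|x] plus a proportional pull toward a reference trajectory. The model `MODEL`, the `reference` function, the `gain` value and the perturbation parameters `push_at` and `push` are all hypothetical.

```python
import math

def gauss_pdf(v, mean, var):
    """Density of a 1-D Gaussian evaluated at v."""
    return math.exp(-0.5 * (v - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def expected_velocity(model, x):
    """GMR estimate of E[dx | x] for a GMM over (x, dx)."""
    raw = [c["prior"] * gauss_pdf(x, c["mu_x"], c["var_x"]) for c in model]
    total = sum(raw)
    return sum(w / total * (c["mu_dx"] + c["cov_x_dx"] / c["var_x"] * (x - c["mu_x"]))
               for w, c in zip(raw, model))

def reference(t):
    """Hypothetical demonstrated trajectory: exponential approach to x = 1."""
    return 1.0 - math.exp(-t)

def reproduce(model, ref, x0, dt=0.01, steps=200, gain=5.0, push_at=None, push=0.0):
    """Euler integration of: dx = E[dx | x] + gain * (ref(t) - x)."""
    x, path = x0, []
    for i in range(steps):
        t = i * dt
        if i == push_at:
            x += push  # external perturbation applied once
        dx = expected_velocity(model, x) + gain * (ref(t) - x)
        x += dx * dt
        path.append(x)
    return path

# Hypothetical hand-set model; both components lie on the line dx = 1 - x.
# Only the terms needed for the regression are stored.
MODEL = [
    {"prior": 0.5, "mu_x": 0.25, "var_x": 0.03, "mu_dx": 0.75, "cov_x_dx": -0.03},
    {"prior": 0.5, "mu_x": 0.75, "var_x": 0.03, "mu_dx": 0.25, "cov_x_dx": -0.03},
]

if __name__ == "__main__":
    pushed = reproduce(MODEL, reference, 0.0, push_at=50, push=0.3)
    print("final position:", pushed[-1], "reference:", reference(2.0))
```

In this sketch the attractor term is what brings the state back after the push, while the P(dx|x) term supplies the learned velocity profile. A larger `gain` recovers faster but follows the learned dynamics less closely.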