| clean document | uniform noise (noise 0) | Gaussian temporal noise (noise 1) |

This page contains additional material associated with the submission “A Sparsity Constraint for Topic Models - Application to Temporal Activity Mining” at the NIPS-2010 Workshop on Practical Applications of Sparse Modeling: Open Issues and New Directions. It contains further results on synthetic documents and on video recordings.

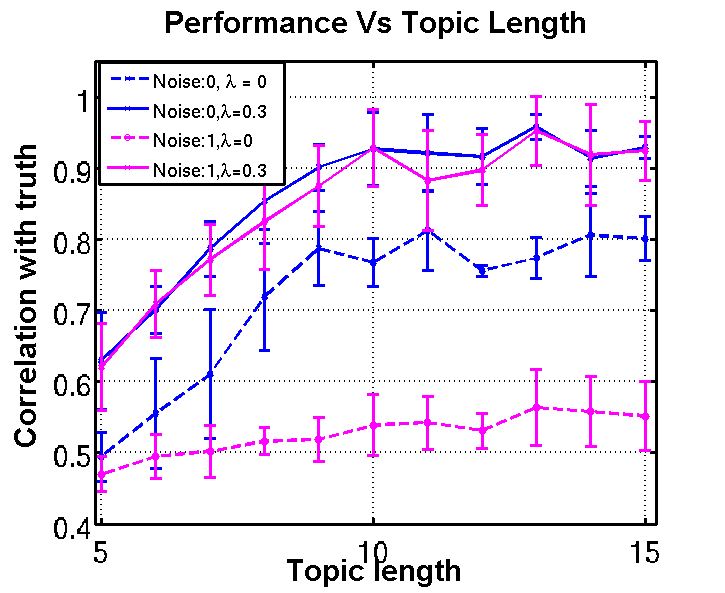

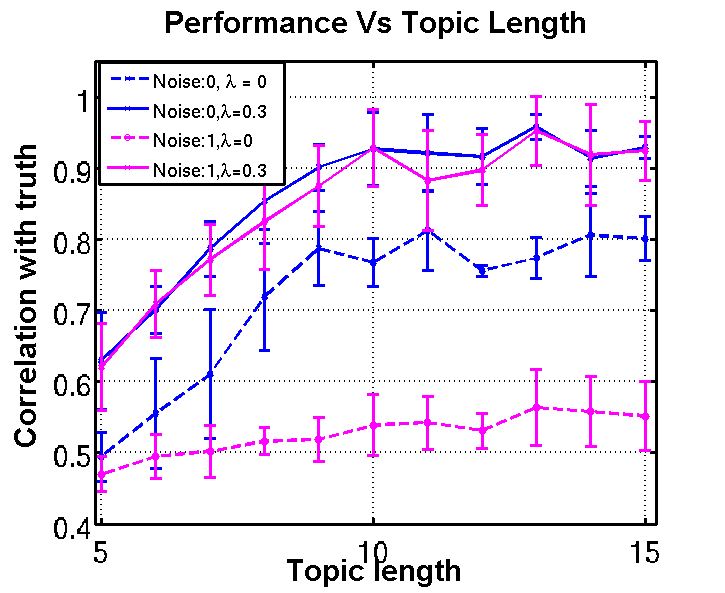

With two kinds of noise (0: words added at uniformly random positions in the document; 1: Gaussian perturbation of the occurrence time of each word), we can see how well motifs are recovered with and without sparsity. When the motif length parameter is shorter than the actual motif duration, the recovered motifs are truncated versions of the original ones, and the 'missing' parts are captured elsewhere, which lowers the correlation. Longer temporal windows, on the other hand, have little effect on learning, even under noisy conditions. In both clean and noisy conditions, however, performance is significantly worse without the sparsity constraint.
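The two noise types can be sketched as follows. This is only an illustration under stated assumptions: the document is taken to be an integer count matrix of shape (words, time steps), and the function names, the number of extra words `n_extra`, and the standard deviation `sigma` are hypothetical, not values from the submission.

```python
import numpy as np

def add_uniform_noise(doc, n_extra, rng):
    """Noise 0: add n_extra word occurrences at uniformly random (word, time) cells."""
    noisy = doc.copy()
    n_words, n_times = doc.shape
    words = rng.integers(0, n_words, size=n_extra)
    times = rng.integers(0, n_times, size=n_extra)
    # np.add.at accumulates correctly even when the same cell is drawn twice.
    np.add.at(noisy, (words, times), 1)
    return noisy

def add_gaussian_time_noise(doc, sigma, rng):
    """Noise 1: shift every word occurrence in time by a rounded Gaussian offset."""
    n_words, n_times = doc.shape
    words, times = np.nonzero(doc)
    counts = doc[words, times]
    # Expand counts so that each single occurrence gets its own offset.
    words = np.repeat(words, counts)
    times = np.repeat(times, counts)
    offsets = rng.normal(0.0, sigma, size=times.size)
    shifted = np.rint(times + offsets).astype(int)
    # Assumption: occurrences pushed outside the document are clipped to its ends.
    shifted = np.clip(shifted, 0, n_times - 1)
    noisy = np.zeros_like(doc)
    np.add.at(noisy, (words, shifted), 1)
    return noisy
```

Uniform noise increases the total word count, whereas the Gaussian perturbation keeps the count of every word unchanged and only blurs its timing.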

| clean document | uniform noise (noise 0) | Gaussian temporal noise (noise 1) |

We can visually compare the motifs recovered when we ask for fewer motifs (3), for the exact number (5, in which case the recovery is perfect), and for more motifs (6). With fewer motifs, multiple original motifs are captured by a single recovered motif. With more motifs, original motifs are duplicated.
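The same comparison can be made numerically by matching each recovered motif to its closest original. The sketch below assumes motifs are arrays of equal shape (words by relative time) and uses cosine similarity as the correlation score; the exact measure used in the submission is not stated on this page, and the function names are hypothetical.

```python
import numpy as np

def motif_correlation(a, b):
    """Normalized correlation (cosine similarity) between two motifs of equal shape."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def best_matches(true_motifs, recovered_motifs):
    """For each recovered motif, return (index of closest true motif, correlation)."""
    matches = []
    for recovered in recovered_motifs:
        scores = [motif_correlation(true, recovered) for true in true_motifs]
        k = int(np.argmax(scores))
        matches.append((k, scores[k]))
    return matches
```

Under this scoring, a duplicated motif shows up as two recovered motifs matched to the same original index, while a merged motif shows up as a single recovered motif with only moderate correlation to each of several originals.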

We can visually compare the motifs recovered by PLSM (on the left) and by TOS-LDA (on the right). The topics recovered by PLSM are indistinguishable from the ones used to generate the synthetic documents. In contrast, because TOS-LDA inherently lacks alignment ability, none of the five topics it recovers truly represents one of the five patterns used to create the documents.

| Our method | TOS-LDA |

Two settings are reported in the article for the unsupervised extraction of activity patterns in videos. You can find some extracts of the videos:

To get a better idea of what our model extracts, here are some recovered motifs on these videos.

We show here some corresponding recovered temporal motifs with and without sparsity. We show two representations:

| without sparsity | with sparsity |

Two motifs of cars going from the top to the bottom-right.

| without sparsity | with sparsity |

Two motifs of cars going from the bottom-right to the top.

You can obtain the video of the event detection task [avi] [ogv]. This video shows how recovered motifs can be interpreted as events and how we can use this interpretation to detect these events. The upper right quadrant shows the inferred starting time of the two motifs represented by the arrows on the image below.
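This page does not describe the detection rule itself. One simple possibility, given here purely as an assumption, is to report an event wherever the inferred start-time activation of a motif has a local peak at or above a threshold. The function name and the threshold are hypothetical.

```python
import numpy as np

def detect_events(start_activation, threshold):
    """Return time indices where the activation peaks locally at or above threshold."""
    x = np.asarray(start_activation, dtype=float)
    events = []
    for t in range(len(x)):
        left = x[t - 1] if t > 0 else -np.inf
        right = x[t + 1] if t + 1 < len(x) else -np.inf
        # Strict on the left and non-strict on the right, so a flat peak
        # is reported once, at its first time step.
        if x[t] >= threshold and x[t] > left and x[t] >= right:
            events.append(t)
    return events
```

For example, `detect_events([0, 0.1, 0.9, 0.2, 0, 0.6, 0.6, 0.1], 0.5)` returns `[2, 5]`: one event per peak, with the flat peak at steps 5 and 6 counted once.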